CMS12 - What do you do if/when Find is unavailable or slow?

There could be a number of reasons for search and navigation being slow.

- Clients are pretty much always on a shared cluster with other clients, so other clients' load on the cluster can affect you. When you see slowness, raise a ticket, and also raise one to enquire about the setup of your client's index, so you know how many others you're sharing with and whether there has been any impact on that cluster.

- Make sure you've optimized your queries around common issues

As mentioned in the link, QPS is a big deal. It's often the limiting factor, and a whole site built around search can suffer if it makes a large number of calls. You can check Application Insights to see if you're hitting QPS limits; if you do, core functions won't work. This also affects indexing calls, so make sure you're not customizing or doing things with indexing that would trigger excessive calls. Where possible, for small sets of data, remove the dependency on Search & Navigation and use IContentLoader instead.
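As a rough illustration of removing a hard dependency on search, here is a minimal sketch of a "try search, otherwise fall back" helper. The class and method names are hypothetical, and both delegates stand in for your own code: the primary would wrap your Find query and the fallback would load the same small set through IContentLoader (for example with GetChildren). It only covers query-time failures, not failures during site initialization.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of any Optimizely package.
public static class SearchFallback
{
    // Runs the primary (search-backed) query; if it throws, runs the fallback instead.
    public static IReadOnlyList<T> GetOrFallback<T>(
        Func<IReadOnlyList<T>> primary,
        Func<IReadOnlyList<T>> fallback,
        Action<Exception> onFailure = null)
    {
        try
        {
            return primary();
        }
        catch (Exception ex)
        {
            // In a real site you would probably catch narrower exception types
            // (for example EPiServer.Find.ServiceException) instead of Exception.
            onFailure?.Invoke(ex); // e.g. log the failure
            return fallback();
        }
    }
}
```

The point of passing delegates is that the calling code does not care which source answered, so the same page logic works whether search is up or not.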

The specific error there with initialization, however, could be a problem with your models or indexing.

If you're having issues, I would suggest clearing your index and re-indexing, and also checking the underlying errors for any signs of serialization issues on properties.

Ok, my bad for describing the issue wrong ; D

In CMS 11 we implemented a solution for when Find is down: we either don't show the content or take it directly from the cache/database. Bottom line, our site does not break.

Now we cannot even start the solution if Find is down (down meaning IP blocked or wrong index name). This was tested locally and on integration.

Has there been some change?

Today neither I nor my colleagues can start the solution locally; we get an error.

We have each our own index.

Back to the initial question, Can the project be started without Find? : D

Hi EVT

Have you tried this setting? From memory, it should let you bypass the error during startup:

    "FindCommerce": {
      "IgnoreWebExceptionOnInitialization": true
    },

IgnoreWebExceptionOnInitialization was a workaround added to soften the issue, but in retrospect it is not a good one, because any nested conventions are lost when it is used.

@EVT what is the full stack trace? What is throwing the exception?

Thanks @Quang, I didn't realize that setting would impact nested conventions. I will note it down.

@Evt, the project can be started without Search & Navigation (formerly known as Find). I have had no issue with that in CMS 12 in any project I have created. Just make sure not to install the Find-related packages in your solution if you're not using them.

I think the way you are trying to simulate a Search & Navigation failure is not quite right. Both the index name and its location are managed in the DXP environment. If an IP gets blocked, that is an infrastructure-level impact, not something specific to your project, and the Optimizely managed service will be your first point of contact. As for an incorrect index name, that is unlikely to happen, because the index name/URL are managed in the Azure Portal and you won't have permission to change them.

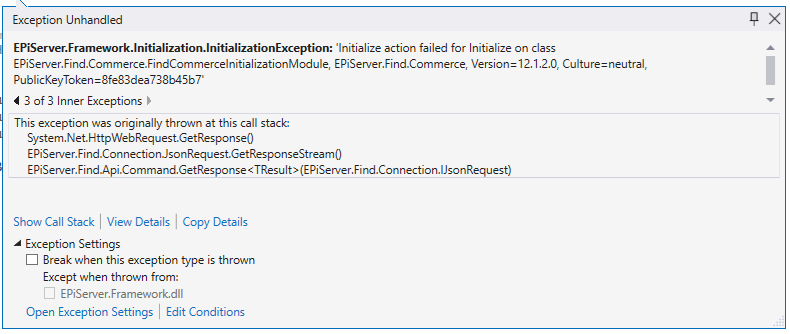

I received an email a moment ago saying that my index has expired. I started the solution and got an instant exception.

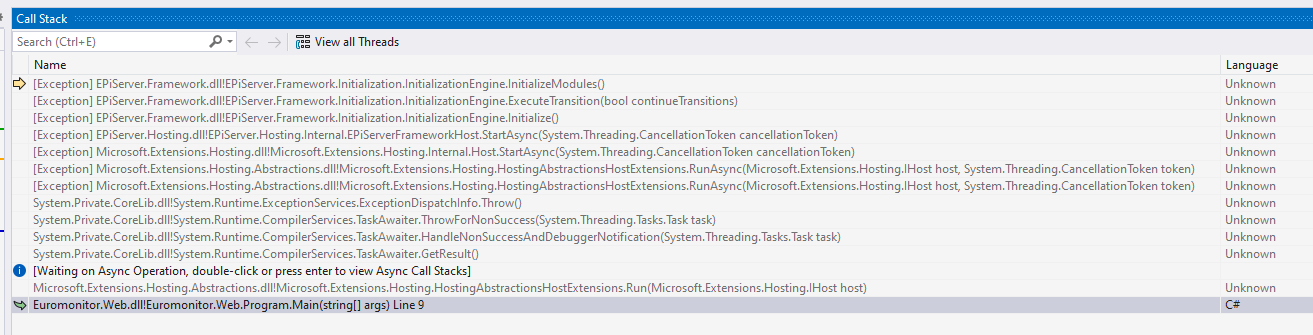

The exception is being thrown by the Main method in Program.cs. This is the call stack.

Exception:

    System.ApplicationException: Could not apply catalog content conventions.
    The content indexed during this session will not contain important additional fields and searches might work incorrectly, until the site restarts and the conventions are applied successfully.
    We recommend to fix the following exception, but if you are certain this exception can be safely ignored, add 'episerver:findcommerce.IgnoreWebExceptionOnInitialization' key with value = true to appSettings.
     ---> EPiServer.Find.ServiceException: The remote server returned an error: (401) Unauthorized.
    Unauthorized
     ---> System.Net.WebException: The remote server returned an error: (401) Unauthorized.
       at System.Net.HttpWebRequest.GetResponse()
       at EPiServer.Find.Connection.JsonRequest.GetResponseStream()
       at EPiServer.Find.Api.Command.GetResponse[TResult](IJsonRequest request)
       --- End of inner exception stack trace ---
       at EPiServer.Find.Api.Command.GetResponse[TResult](IJsonRequest request)
       at EPiServer.Find.Api.GetMappingCommand.Execute()
       at EPiServer.Find.ClientConventions.NestedConventions.AddNestedType(IEnumerable`1 declaringTypes, String name)
       at EPiServer.Find.ClientConventions.NestedConventions.MarkAllImplementationsAsNested(Type declaringType, String name)
       at EPiServer.Find.ClientConventions.NestedConventions.ForInstancesOf[TSource,TListItem](Expression`1 exp)
       at EPiServer.Find.ClientConventions.NestedConventionItemInstanceWrapper`1.Add[TListItem](Expression`1 expr)
       at EPiServer.Find.Commerce.CatalogContentClientConventions.ApplyNestedConventions(NestedConventions nestedConventions)
       at EPiServer.Find.Commerce.CatalogContentClientConventions.ApplyConventions(IClientConventions clientConventions)
       at Web.Infrastructure.Initialization.CatalogContentIndexConventions.ApplyConventions(IClientConventions clientConventions) in C:\Repositories\Episerver\Web\Infrastructure\Initialization\CatalogContentIndexConventions.cs:line 27
       at EPiServer.Find.Commerce.FindCommerceInitializationModule.Initialize(InitializationEngine context)
       --- End of inner exception stack trace ---
       at EPiServer.Find.Commerce.FindCommerceInitializationModule.HandleInitializationException(Exception exception, Boolean ignoreInitializationError)
       at EPiServer.Find.Commerce.FindCommerceInitializationModule.Initialize(InitializationEngine context)
       at EPiServer.Framework.Initialization.Internal.ModuleNode.<>c__DisplayClass4_0.

Yes, as it says, the index expired and you are getting a 401 because the "key" is no longer valid. The exception message is quite clear:

We recommend to fix the following exception, but if you are certain this exception can be safely ignored, add 'episerver:findcommerce.IgnoreWebExceptionOnInitialization' key with value = true to appSettings.

Hi EVT

Your index won't expire in a licensed environment (e.g. DXP, or if you have purchased a Search & Navigation license). With a demo license you'll hit this issue once the index expires, which is why I suggested the ignore setting in my previous response.

Again, this isn't something you as a customer should worry about that much, as Optimizely owns the infrastructure and you have their SLA to cover you.

Guys, the problem is that we had a working solution for any exception related to Find: the project should still load, with different logic for what to show and where to take it from. It was never supposed to fail to start, fail to load, or crash.

After the upgrade that code no longer works. The expired index was just one example, since I wanted to show the error. We have also tested with an IP block and an index name change. Those are three scenarios where Find is broken but the solution should still work. It worked under those circumstances before the upgrade; now it no longer does.

Is there a solution for the latest version of Episerver so that the site still works when Find is slow, timing out, or returning server errors on their side?

Unfortunately no, especially if your site depends heavily on Find. You can mitigate the problem somewhat by leveraging caching (both your own caching and Find's internal cache), but if Find is down or performing badly, there is not much you can do to cover for it.

But remember that you are covered by the SLA, under which, I believe, Find must be available (up and running, with a certain level of performance) at least 99% of the time (up to 99.7% if you use the highest tier). That should be enough to cover most cases. See: Find Service Level Agreement - Optimizely
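To make the "your own caching" part concrete, here is a minimal sketch of a last-known-good cache: every successful search result is remembered, and if a later call throws, the remembered result is served instead. The class name and API are hypothetical, it has no expiry or size limit, and it only helps with query-time failures; it does nothing for an exception thrown during initialization like the one above.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical helper: remembers the last successful result per cache key.
public sealed class LastKnownGoodCache<TValue>
{
    private readonly ConcurrentDictionary<string, TValue> _lastGood =
        new ConcurrentDictionary<string, TValue>();

    // Returns true with a fresh value when the query succeeds, or with the
    // last remembered value when it throws. Returns false only when the
    // query fails and nothing has been remembered for this key yet.
    public bool TryGet(string key, Func<TValue> query, out TValue value)
    {
        try
        {
            value = query();        // e.g. your Find query
            _lastGood[key] = value; // remember the latest good result
            return true;
        }
        catch (Exception)
        {
            // Search failed: serve stale data if we have any.
            return _lastGood.TryGetValue(key, out value);
        }
    }
}
```

When TryGet returns false, the caller can hide the search-driven block or load the content from another source, which is roughly the behaviour described for the CMS 11 solution.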

To get back to an earlier question, is this still valid for CMS12?

We have tried to test it by changing the Index name, then by blocking the IPs and the solution does not even start, just instantly throws an exception