Speed up your site with client-side prefetched content - Part 1

By now, we all know that a critical part of any user experience is speed: how fast your site is. And we all appreciate it when a website just feels lightning fast.

Now, there are many ways to make sites running on Episerver perform well: Episerver caching, output caching, smart coding, a CDN and much more (one particular article comes to mind, but there are many).

However, even on the most optimized sites, there is still the time it takes for increasingly large web pages to reach the end consumer, many of whom are still on surprisingly slow connections, with many hops between your site and them.

You might have read about how Amazon is speeding up delivery of the packages you order by anticipating what you will buy and putting it on your delivery truck before you even know you want it. Recently I've been investigating ways to do just that, but for web content: if we can guess what content you'll need next, we can have your browser silently preload it in the background and then display it the moment you ask for it.

My first thought was that a clever piece of JavaScript could anticipate the visitor's moves, fetch new content in the background Ajax-style and then update the URL with pushState (some refer to this technology combo as PJAX = pushState + Ajax). Naturally, it turns out that someone is already doing that, and with a simple script on your pages it's easy to try out: InstantClick (http://instantclick.io/).

Now, to try this out, I simply started a plain vanilla Alloy site in Visual Studio on my local machine. This immediately caused a problem: Episerver running locally is just too damn fast to notice any difference. So the first order of business was to slow Episerver down significantly. Fortunately, this was easily done with a Thread.Sleep statement in the main controller (kids, don't try this at home, because you'll forget to remove it).

Then it was just a question of adding the scripts to the root layout:

```html
<script src="/static/instantclick.min.js" data-no-instant></script>
<script data-no-instant>InstantClick.init();</script>
```

And all of a sudden I could watch in Chrome developer tools as the site started loading whatever I hovered over.

Now, while this is really cool, it's not quite there yet. First of all, loading only begins on hover, and it 'just' replaces the HTML and fixes the URL using JavaScript. Somehow that feels a bit flaky, and it scared me a little. It also looks like it loads the content again and again if I move the mouse in and out of a link.
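
One way around the repeated loading would be to remember which URLs have already been requested and emit a prefetch hint only once per URL. The sketch below is not how InstantClick works internally; `createPrefetcher` is a hypothetical helper, and the browser wiring (a `<link rel="prefetch">` added on hover) is shown only in a comment so the core logic can run anywhere.

```javascript
// Hypothetical helper: returns a prefetch function that requests each URL at most once.
// `insertLink` is injected so the logic can run outside a browser (e.g. in a test).
function createPrefetcher(insertLink) {
  const requested = new Set(); // URLs we have already asked the browser to fetch

  return function prefetch(url) {
    if (requested.has(url)) {
      return false; // already requested: hovering again does nothing
    }
    requested.add(url);
    insertLink(url);
    return true;
  };
}

// In a browser, the injected function could add a <link rel="prefetch"> to <head>:
//
//   const prefetch = createPrefetcher(url => {
//     const link = document.createElement("link");
//     link.rel = "prefetch";
//     link.href = url;
//     document.head.appendChild(link);
//   });
//   document.addEventListener("mouseover", e => {
//     const a = e.target.closest && e.target.closest("a[href]");
//     if (a && a.origin === location.origin) prefetch(a.href);
//   });
```

Because the hint only warms the browser cache, the click that follows is still a normal page load (served from cache if the response is cacheable) rather than HTML swapped in by script.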

So I dug a bit further, and realized that I had apparently fallen a bit behind on the nifty details of the HTML5 spec. It turns out that this problem is already solved in the HTML5 standard, and the solution is heavily used by Google and the like.

You can both "prefetch" and "preload" web content just by specifying it in HTTP headers or in a LINK tag like this: `<link rel="prefetch" href="[url]">`.
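
For the HTTP-header route, the same hint goes into a `Link` response header (syntax per RFC 8288), with several URLs separated by commas. A minimal sketch, assuming your server code can set arbitrary response headers; `buildLinkHeader` is a hypothetical helper, and whether a given browser honors header-based prefetch hints is worth checking before relying on it.

```javascript
// Hypothetical helper: builds the value of an HTTP "Link" header listing URLs
// the browser may fetch ahead of time, e.g. "</about>; rel=prefetch".
// URLs are assumed to be already percent-encoded; no encoding happens here.
function buildLinkHeader(urls, rel = "prefetch") {
  return urls
    .map(url => {
      // "<", ">" and whitespace would break the <...> delimiters of a link-value
      if (/[<>\s]/.test(url)) {
        throw new Error(`Invalid URL for Link header: ${url}`);
      }
      return `<${url}>; rel=${rel}`;
    })
    .join(", ");
}

// e.g. in a Node.js handler:
//   response.setHeader("Link", buildLinkHeader(["/about", "/media/brochure.pdf"]));
```

For the two URLs in the usage comment, the header sent would be `Link: </about>; rel=prefetch, </media/brochure.pdf>; rel=prefetch`.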

This way you can tell the browser about any kind of content the user will soon need and have it downloaded into the client cache. Note that it only loads that specific resource and does not try to render it (so if it's an HTML page, it will not start downloading images, style sheets and scripts just yet).

However, while it's not part of any official standard yet, both Chrome and IE11 now also support prerendering, where the browser completely loads and renders the page, using a very similar syntax: `<link rel="prerender" href="[url]">`.
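
Since the two hints differ only in the `rel` value, a single renderer can emit either. A sketch under stated assumptions: `renderResourceHints`, `escapeAttr` and the `{ href, rel }` input shape are hypothetical, and only the two `rel` values discussed here are allowed through.

```javascript
// Only the two hint types discussed in this post are rendered.
const ALLOWED_RELS = new Set(["prefetch", "prerender"]);

// Escape characters that could break out of a double-quoted HTML attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Hypothetical helper: renders hints ({ href, rel }) as <link> tags for <head>.
// "prefetch" only downloads the resource; "prerender" also renders the page
// in browsers that support it. Unknown rel values are silently dropped.
function renderResourceHints(hints) {
  return hints
    .filter(hint => ALLOWED_RELS.has(hint.rel))
    .map(hint => `<link rel="${hint.rel}" href="${escapeAttr(hint.href)}">`)
    .join("\n");
}
```

Prerendering costs the visitor far more than a prefetch, so it probably makes sense to reserve it for the single most likely next page and use plain prefetch for the rest.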

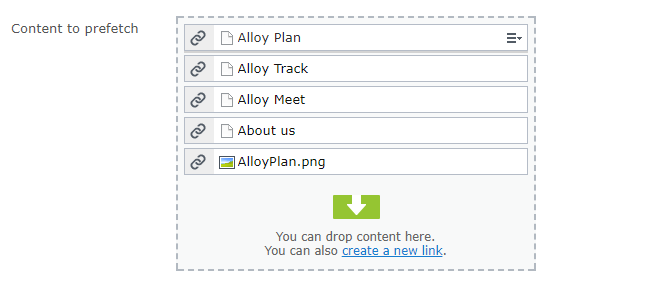

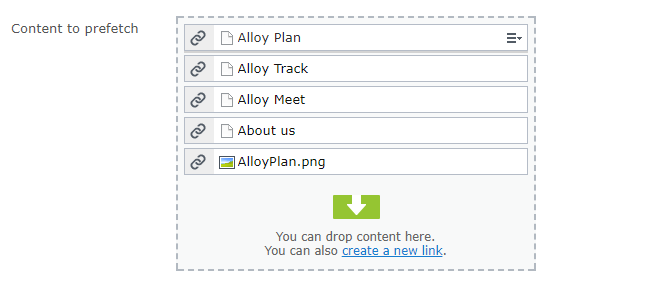

I decided to try this out and added a LinkItemCollection property to the Alloy base page type, 'SitePageData':

```csharp
using System.ComponentModel.DataAnnotations;
using EPiServer.Core;
using EPiServer.SpecializedProperties;

public abstract class SitePageData : PageData, ICustomCssInContentArea
{
    // Editor-managed list of links (pages or media) to hint to the browser for prefetching
    [Display(Name = "Content to prefetch",
        Description = "Urls that should be prefetched for quick access - includes pages or big media assets that are expected to be shown next",
        GroupName = Global.GroupNames.MetaData,
        Order = 200)]
    public virtual LinkItemCollection PrefetchUrls { get; set; }

    ...
}
```

Then all that's left to do is to render the links in the root Razor layout. I do this in the `<head>` section:

```cshtml
@if (Model.CurrentPage.PrefetchUrls != null)
{
    foreach (var li in Model.CurrentPage.PrefetchUrls)
    {
        // Resolve the link to Episerver content; Route returns null for URLs
        // it cannot route (e.g. external links), which are skipped here
        var content = EPiServer.Web.Routing.UrlResolver.Current.Route(new EPiServer.UrlBuilder(li.Href));
        if (content != null)
        {
            <link rel="prefetch" href="@Url.ContentUrl(content.ContentLink)">
        }
    }
}
</head>
```

Now editors can simply drag in the content (pages and media files) they want prefetched for whoever is viewing the page.

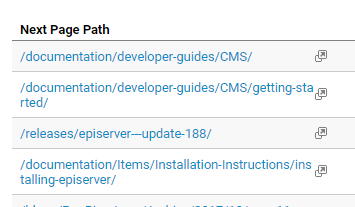

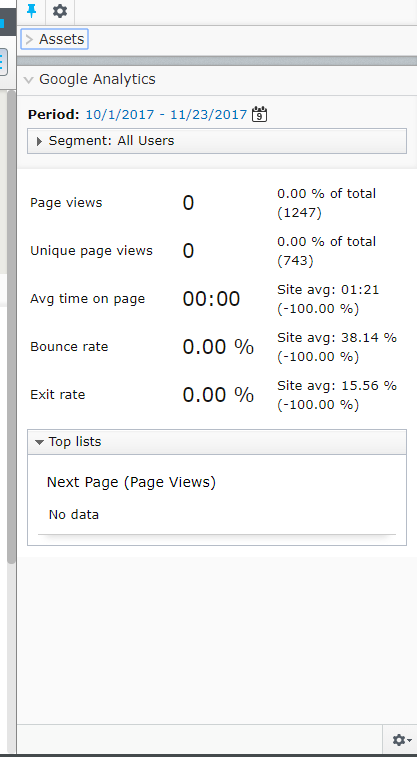

Now, obviously you are thinking "Great, but does this mean that my editors will have to guess which pages will be loaded next?", and I hear what you are saying. For starters, you can install the Episerver Google Analytics integration. That gives you a nice widget that pops up on the right side of the page with a list of "Next" pages, very similar to the list you see in Google Analytics itself.

This is how it looks in Google Analytics (data from Episerver World, Next pages after "CMS"):

And here is how it could look in your editorial interface (except that since this is a local test site with no data, my list is sadly very empty).

But why stop here? Surely we can find a way to automate this process?! Extending the Google Analytics integration to automate these steps is something I plan to dig into when I find a moment to write Part 2 of this blog post. And who knows, maybe there'll even be a GitHub link or NuGet package under the Christmas tree this year...
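
As a rough idea of what such automation might boil down to, here is a sketch that picks prefetch candidates from a "Next pages" list. It is not the Google Analytics API or the Episerver integration: the `{ url, views }` data shape, the `pickPrefetchUrls` name and the default thresholds are all assumptions for illustration.

```javascript
// Hypothetical helper: picks prefetch candidates from "next page" statistics
// for the current page. The input shape ({ url, views }) is an assumption,
// e.g. rows copied from an analytics "Next pages" report.
function pickPrefetchUrls(nextPages, maxUrls = 3, minShare = 0.1) {
  const total = nextPages.reduce((sum, page) => sum + page.views, 0);
  if (total === 0) {
    return [];
  }
  return nextPages
    .filter(page => page.views / total >= minShare) // skip rarely followed paths
    .sort((a, b) => b.views - a.views)              // most common next step first
    .slice(0, maxUrls)                              // keep the hint list short
    .map(page => page.url);
}
```

Keeping the list short matters because every prefetched URL costs bandwidth for visitors who never click it; the share threshold is one simple way to express "likely enough".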

Nov 22, 2017

Even cooler, pair this with our machine learning personalization/segmenting capabilities to prefetch what the specific user is likely to view next.

Thanks for sharing, You look the world from 4th dimension!

Nice