Migrating Episerver content with custom import processing

In this blog post I tell you how we solved with custom import processing the customers need to migrate thousand or a bit more pages (plus blocks and media data instances) from one Episerver site to another Episerver site with different content types.

Background

First a quick background to understand a bit why and what. Our team had gotten a website lets call it 'Mango' to maintenance from another Episerver partner and the site building had started around 2014 so there was some history on the implementation of the site and one could clearly see there has been different teams doing the implementation as there seemed to not be one common way how to do things but anyways. So our teams work started with getting the hang of the website implementation and at the same time continue implementing new features and doing some polishing and fixes to it. Months passed and we got a request from the project owner that we would need a new website which is a copy paste of the current website codebase and we need it yesterday ;-)

So naturally we had some discussion with the customer could they tell us more why they need a copy paste of the website and we explained that we shouldn't just copy paste blindly as we would then copy some of the not so good ideas. Also we pointed out that we will have technical dept after this in two sites and not just one. The customer couldn't tell us everything about why they need it and was sad about it at the same time but this copy paste website was supposed to be just temporary, live for 3 months or 6 months at maximum. We would only change fonts and some colors and we would get those soon from a design agency.

Well you can guess it already - the design changes were not just fonts and colors but quite big layout and grid changes compared to copied original layout - and there was the time pressure for the implementation of the "ccopy & paste" website. Anyways the team did it - we did copy paste code base but with modifications, like renaming used content types (namespace changes naturally), use feature folders, refactor configuration and settings, new layouts and styles, etc improvements.

After this "copy & paste" website was ready and testing with real content started, we were told the real reason: there was a merger and now it was official and all legal approval was ready and announced to the public. Our company 'Mango' merged with another company lets call it 'Orange' and together they were called 'Mango Orange group'. So we had created a website for this new company and mainly to contain their new joined corporate pages and financial information. All good.

All of the initial content for this new 'Mango Orange group' website was done manually as the content didn't exist on either 'Mango' or 'Orange' websites but then came the 'but' - it was decided by the new company that this copy & paste website would be the new website and we would need to develop new features there AND migrate existing content from 'Orange' website...

Content migration options

If the source websites content types are defined in separate assembly then you could simply reference that assembly in the target website solution and use Episerver built-in export and import functionality with a BUT, yes you would have the content types and the import would just work but then you would need to convert the pages, blocks and media types to 'Mango' websites content types which are the ones used. Pages can be converted out of the boxes using the admin tool but that is manual work AND IT DOESN'T convert blocks (or used media data)! Fellow EMVP Tomas Hensrud Gulla has blogged about his custom tool which can covert blocks: Convert Episerver Blocks. So even if we would do all the manual work and convert page types and block types out media data content types still would be incorrect (and in reality we had same content type names with different content guids so we were already screwed on that part) and also it would take time to do it.

My next idea was: What if we could use the Episerver import and hook to the events it exposes and modify the source content in those events to match the destination website content types. Quick proof of concept using two Ally websites with modified content types and different content types gave good results, like we can change the content type in import, we can change properties data type, we can filter out languages we don't have enabled, etc.

So we decided to go with building a custom import processing "framework" to handle the content in the import and convert it to the content types used on our "Mango Orange group" website.

How does the Episerver import flow works?

I'll keep this part intentionally on a high level (I'll try to find time to blog about this in more details in future) but on a high level this is how it goes:

- Episerver import reads the your-export-package.episerverdata package

- this really is just a zip package (you can open it for example with 7-zip) and look at the structure

- inside there is folder 'epi.fx.blob:' which child folder contains the exported blobs

- in the root there is the epix.xml

- xml file containing the exported content (basically pages and blocks with content language versions)

- this really is just a zip package (you can open it for example with 7-zip) and look at the structure

- and the import framework is triggered with the data from your package

- first the blobs are imported

- this is the binary part of the blobs, so files are copied to your target blobs container

- in the event handling you can inspect the blobs filename for example for allowed extension and cancel the import for the blob if the file extension is not allowed in the target website

- next step is to import the content

- here you can inspect the content to be imported, you get all the data about the content to be imported (Note! The event handler gets a single item when it is called and it doesn't know about other content)

- add or remove properties

- rename properties

- change propertys data type (like from Episerver Url to ContentReference, naturally only when the url was pointing to Episerver content)

- you have full control to the content type about to be imported, so can do quite many things to the data at this stage

- cancel the import of the content

- change should the 'For All Sites' be used instead of 'For This Site' (for example if images are not stored with the content 'For This Page' or 'For This Block' but in a shared location)

- check that the master language is enabled in the target website

- remove language branches that the target doesn't support

- after your code has processed the content and has not canceled the content import

- Episerver default import implementation creates the content type instance basically using the content repository GetDefault using the content type id and content master language

- Note, when Episerver is resolving the content type to create it has some fallback logic if the content to be created was not found using the content type id (property: PageTypeId)

- try to load the content type using the content type name (property: PageTypeName)

- try to load the content type using the ContentTypesMap

- try to load the content type using the content types display name

- Note, when Episerver is resolving the content type to create it has some fallback logic if the content to be created was not found using the content type id (property: PageTypeId)

- after the instance is created using IContentRepository.GetDefault method it reads the property values for the master language of the content and triggers the property import event

- most likely you don't do anything else than debug log the values here as you have most likely processed the property values already in the import content event handler

- and then Episerver import sets the instances property value to the property value from the import process

- and then the same for language branches if there were any

- Episerver default import implementation creates the content type instance basically using the content repository GetDefault using the content type id and content master language

- here you can inspect the content to be imported, you get all the data about the content to be imported (Note! The event handler gets a single item when it is called and it doesn't know about other content)

- import done

Note! When importing content to the target site you most likely should keep the "Update existing content items with matching ID" checkbox checked. Why? This also makes the import keep the same content id for the content which you will need if you are not doing the whole import for all content in one go (most likely you will do multiple imports to keep the import package size smaller and to do the migration in parts), this way your content links don't break when doing multiple import batches as Episerver uses the original content ids so the links between content keeps working because of that). There is also one cool feature or side-effect of having it checked - you could later also import a single language branch for the content from the original source and it would be connected to the already imported content because same content ids were used in target and source.

Building the custom import processing

Building blocks for our custom import processing:

- custom initializable module that hooks to the import events

- creating initialization module Episerver documentation

- import events IDataImportEvents (assembly: EPiServer.Enterprise, namespace: EPiServer.Enterprise)

- is the entry point for the whole import process

- finds the import content type processor which can handle the content and calls it with the content properties

- handles language branches also, so if the langugae branch is not supported in the target it will remove the language branch properties

- handles should a blob be imported or not (based on the blobs file extension)

- knows about the target enabled languages

- will cancel import for content which is not in the enabled languages

- if content uses 'For This Site' folder then that is switched to 'For All Sites'

- custom interface for content processors: IImportContentTypeProcessor

- the implementation is responsible to process the content

- modify the content to match the target system

- has a method: bool CanHandle(string contentTypeId);

- guid string for content type like: 9FD1C860-7183-4122-8CD4-FF4C55E096F9

- called by our initialization module when import raises the ContentImporting event to find a processor that can handle the content

- has a methd: bool ProcessMasterLanguageProperties(Dictionary<string, RawProperty> rawProperties);

- has a method: bool ProcessLanguageBranchProperties(Dictionary<string, RawProperty> rawProperties);

- (initially I thought we might need language branch specific processing but it turned out there was no need for that method)

- the used Dictionary<string, RawProperty> rawProperties is just to avoid looking up properties multiple times from the ContentImportingEventArgs e e.TransferContentData.RawContentData.Property RawProperty array => read the array to a dictionary where the key is the property name and value is propertys RawProperty data

- the implementation is responsible to process the content

Import handler initialization module implementation

So create a new initialization module using the Episerver documentation: creating initialization module.

And in the initialize method get a reference to IDataImportEvents and to the ILanguageBranchRepository services. We store the ILanguageBranchRepository to the initialization modules private field for later use. So out Initialize method implementation looks like this:

public void Initialize(InitializationEngine context)

{

try

{

if (context.HostType == HostType.WebApplication)

{

var locator = context.Locate.Advanced;

// import events

var events = locator.GetInstance<IDataImportEvents>();

// language branch repository

_languageBranchRepository = locator.GetInstance<ILanguageBranchRepository>();

if (events != null && _languageBranchRepository != null)

{

// Register the processors

RegisterImportContentTypeProcessors();

if (_importContentTypeProcessors.Count == 0)

{

ContentMigrationLogger.Logger.Warning("There are no import content type processors registered. Disabling import migration handling.");

// pointless to register to import events if we have no processors

return;

}

// subscribe to import events

events.BlobImporting += EventBlobImporting;

events.BlobImported += EventBlobImported;

events.ContentImporting += EventContentImporting;

events.ContentImported += EventContentImported;

events.PropertyImporting += EventPropertyImporting;

events.Starting += EventImportStarting;

events.Completed += EventImportCompleted;

// we have registered events handlers which we should unregister in uninitialize

_eventsInitialized = true;

}

else

{

ContentMigrationLogger.Logger.Error($"Import migration handling not enabled because required services are not available. IDataImportEvents is null: {events == null}. ILanguageBranchRepository is null: {_languageBranchRepository == null}.");

}

}

}

catch (Exception ex)

{

ContentMigrationLogger.Logger.Error("There was an unexpected error during import handler initialization.", ex);

}

}NOTE! The method RegisterImportContentTypeProcessors() is in the initialization module and is used to register the IImportContentTypeProcessor services and stored to initialization modules private field for later use. ContentMigrationLogger is just our own static class that contains the shared logger for all import logging activities. The events BlobImported and ContentImported are only used to write debug messages about the content to be able to troubleshoot issue related to the content (mainly used when developing an implementation for IImportContentTypeProcessor and testing locally with content).

Blob import

So as I mentioned previously that blobs are the first thing the import processes, our blob importing handler looks like this:

private static readonly string[] _allowedFileExtensions = new string[] { "mp4", "jpg", "jpeg", "png", "svg", "pdf", "json" };

private void EventBlobImporting(EPiServer.Enterprise.Transfer.ITransferContext transferContext, FileImportingEventArgs e)

{

if (e == null)

{

ContentMigrationLogger.Logger.Error("EventBlobImporting called with null reference for FileImportingEventArgs.");

}

if (ContentMigrationLogger.IsDebugEnabled)

{

ContentMigrationLogger.Logger.Debug($"Importing blob, provider name '{e.ProviderName}', relative path '{e.ProviderRelativePath}' and permanent link virtual path '{e.PermanentLinkVirtualPath}'.");

}

// try to get the file extension

if (e.TryGetFileExtension(out string fileExtension))

{

// check is the extension allowed/supported

if (!_allowedFileExtensions.Contains(fileExtension, StringComparer.OrdinalIgnoreCase))

{

ContentMigrationLogger.Logger.Error($"Cancelling blob import because the file extension '{fileExtension}' is not allowed. Provider name '{e.ProviderName}', relative path '{e.ProviderRelativePath}' and permanent link virtual path '{e.PermanentLinkVirtualPath}'.");

e.Cancel = true;

}

}

else

{

// no file extension or it could not be resolved, cancel the blob import

ContentMigrationLogger.Logger.Error($"Cancelling blob import because the file extension could not be extracted from provider relative path value '{e.ProviderRelativePath}'.");

e.Cancel = true;

}

}And here are a snip from our extensions and helpers class for the used extension: TryGetFileExtension(out string fileExtension)

/// <summary>

/// Gets the file extension from a file name (or path and filename).

/// </summary>

/// <param name="fileName">file name or path and file name</param>

/// <param name="fileExtension">the file extension extracted from the <paramref name="fileName"/> without the dot, so result will be like: jpg or png etc.</param>

/// <returns>True if file extension was extracted from <paramref name="fileName"/> otherwise false</returns>

/// <remarks>

/// <para>

/// The extension is not normalized (nothing is done to capitalization), meaning if the extension is 'PnG' then that will be returned.

/// </para>

/// </remarks>

private static bool GetFileExtension(string fileName, out string fileExtension)

{

fileExtension = null;

if (!string.IsNullOrWhiteSpace(fileName))

{

try

{

string tmpFileExtension = Path.GetExtension(fileName);

if (!string.IsNullOrWhiteSpace(tmpFileExtension) && tmpFileExtension.Length >= 2)

{

// length check so that there is more than the dot

fileExtension = tmpFileExtension.Substring(1);

return true;

}

}

catch (Exception ex)

{

ContentMigrationLogger.Logger.Error($"Gettting file extension from filename '{fileName}' failed.", ex);

return false;

}

}

return false;

}

/// <summary>

/// Tries to get the file extension from FileImportingEventArgs property ProviderRelativePath value.

/// </summary>

/// <param name="args">Instance of <see cref="EPiServer.Enterprise.FileImportingEventArgs"/> or null</param>

/// <param name="fileExtension">Extracted file extension</param>

/// <returns>True if the file extension was extracted otherwise false</returns>

public static bool TryGetFileExtension(this EPiServer.Enterprise.FileImportingEventArgs args, out string fileExtension)

{

fileExtension = null;

if (args == null)

{

return false;

}

if (GetFileExtension(args.ProviderRelativePath, out fileExtension))

{

return true;

}

return false;

}Content import

Next step in the import process is to import the actual content and this is the place where we can do manipulation to the content and or cancel the import totally for the content. Episerver import process triggers the ContentImporting event per each content item that is about to be imported (so this event happens before the content item is actually created).

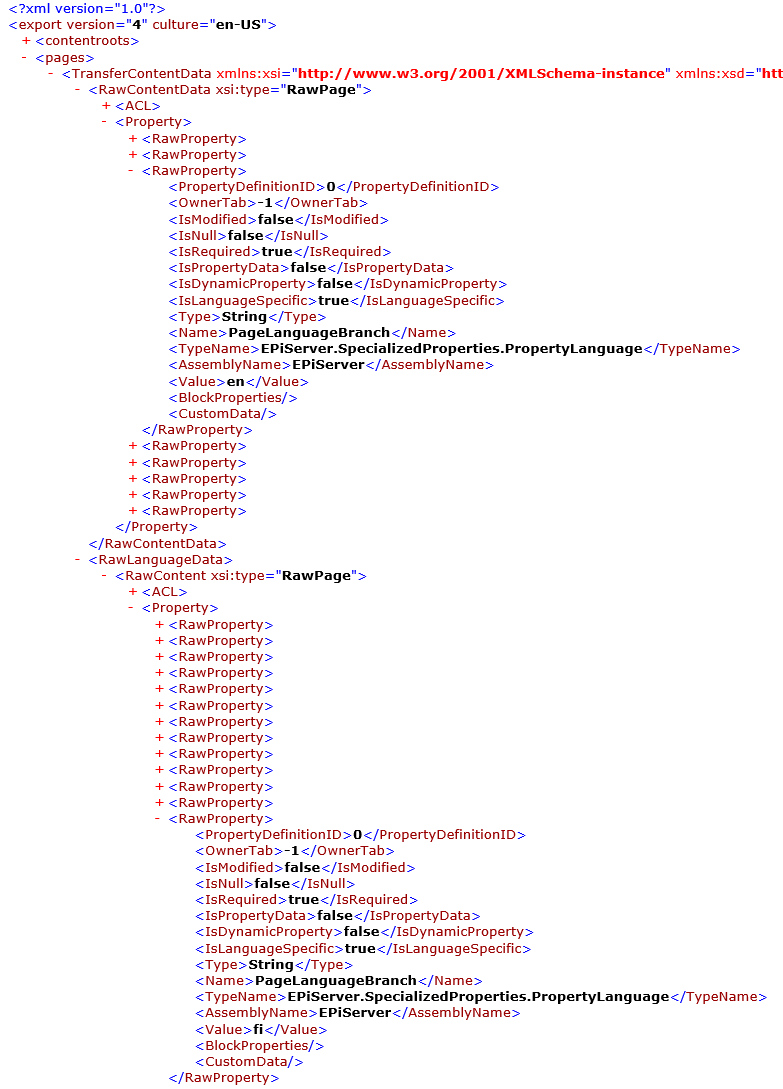

Here is a picture about the epix.xml file structure (the actual xml file was modified, entries removed so that I could squeeze the entries to the picture), showing that there is master language content in english and then there is language branch content in finnish:

So from the picture you can see the basic structure of the XML:

- pages element will contain TransferContentData element(s)

- TransferContentData is the single content item and its language versions (and other possible settings, like language fallback settings in ContentLanguageSettings element)

- if the RawContentData has type attribute with value RawPage, then the content is a page, otherwise its some other content type like: block, media, etc

- RawContentData holds the master content property values

- Language branch property values are in the RawLanguageData

- structure is the same for both the RawContentData and RawLanguageData

- so below those we have the ACL element that can hold access-control settings

- Property element holds all properties for the content

- there is a RawProperty per each property on the content type, and these hold all information about each property, like type, typename, is language specific, etc

- TransferContentData is the single content item and its language versions (and other possible settings, like language fallback settings in ContentLanguageSettings element)

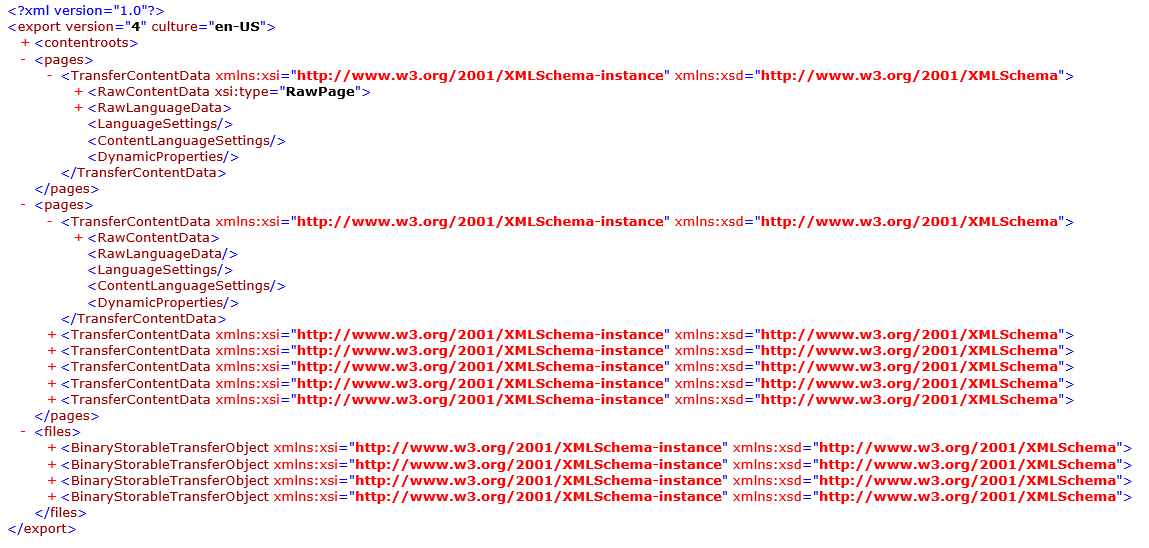

epix XML file high level structure:

So back to the importing event, this event is where we get the TransferContentData element with children nicely parsed to corresponding .Net framework objects. We can get the content from ContentImportingEventArgs TransferContentData property which is of type ITransferContentData and that interface defines the properties which will hold the data from the epix.xml file. So this is the place where "fun" starts with the data manipulation. Unfortunately I cannot share all the code we've writen for the custom import processing but I'll try to share enough information and code snips that might be enough to get you in good start in your own project.

For example in our content the source content had language fallbacks defined in sub structure of the website and we didn't want to bring in any of those settings to our target site, so we created a simple extension method that always removes that information from content:

/// <summary>

/// Removes all content language settings from the <see cref="ITransferContentData.ContentLanguageSettings"/>.

/// </summary>

/// <param name="transferContentData">Instance of <see cref="ITransferContentData"/> or null</param>

public static void RemoveContentLanguageSettings(this ITransferContentData transferContentData)

{

if (transferContentData != null && transferContentData.ContentLanguageSettings != null)

{

transferContentData.ContentLanguageSettings.Clear();

}

}

// transferContentData is gotten from the ContentImportingEventArgs e,

// var transferContentData = e?.TransferContentData;

// transferContentData.RemoveContentLanguageSettings();Then we also use an extension method with some overload extension methods which we can use to once load the RawProperty items to a dictionary where key is the property name and value is the original RawProperty instance. This way we get faster lookups for the properties we want to get instead enumerating the RawProperty[] containing the properties each time we need to do something about a property in the processing. Below is sample code for such extension:

/// <summary>

/// Reads the properties to a dictionary where key is the property name and value is the original RawProperty.

/// </summary>

/// <param name="rawProperties">Array of RawProperty instances</param>

/// <returns>Dictionary containing the original properties</returns>

/// <remarks>

/// <para>

/// NOTE! The RawProperty instances are not copied from the origin they still point to the original array

/// so any modifications are done to the original properties.

/// </para>

/// </remarks>

public static Dictionary<string, RawProperty> GetRawPropertiesAsDictionary(this RawProperty[] rawProperties)

{

if (rawProperties == null || rawProperties.Length == 0)

{

return new Dictionary<string, RawProperty>(0);

}

var dict = new Dictionary<string, RawProperty>(rawProperties.Length);

foreach (var property in rawProperties)

{

// if there is a null value in the array then skip it

if (property == null)

{

continue;

}

dict.Add(property.Name, property);

}

return dict;

}NOTE! As said in the above code comments, the dictionary value objects are still pointing to the same original objects held in the source array, so any changes you make using the dictionary are actually done to the source array objects too, keep this in mind! In our implementation the idea is not to care about the changes to the original array as we will always overwrite the original array objects in our code by calling a Save extension method like this:

/// <summary>

/// Writes the <paramref name="rawProperties"/> dictonary 'Values' property to <paramref name="content"/> instances 'Property' field.

/// </summary>

/// <param name="content">Instance of RawContent</param>

/// <param name="rawProperties">Dictionary containing the raw properties to be writen to the <paramref name="content"/> instances Property field.</param>

/// <exception cref="ArgumentNullException"><paramref name="content"/> is null</exception>

/// <remarks>

/// <para>

/// You need to call this method so that all your changes like removing property gets persisted to the RawContent (<paramref name="content"/>) instances Property field.

/// </para>

/// <para>

/// If the <paramref name="rawProperties"/> is null or has no entries then nothing is done. If your intention is to remove all properties then you need to do it other way.

/// </para>

/// </remarks>

public static void Save(this RawContent content, Dictionary<string, RawProperty> rawProperties)

{

if (content == null)

{

throw new ArgumentNullException(nameof(content));

}

if (rawProperties.HasEntries())

{

content.Property = rawProperties.Values.ToArray();

}

}And we have similiar Save method for block properties (cases where a local block is used aka Block as a property).

Here is a link to a gist containing some extension methods to get Episerver known properties from the "rawproperties" dictionary: https://gist.github.com/alasvant/c446b68cda607ab734110af73aef317b

Import flow in our implementation

So instead copy pasting all of our projects code here, instead I will describe our flow:

- remove the previously mentioned content language settings

- parse the content propeties to dictionary

- get the content 'content type id' property value from dictionary

- if there is no content type id, do nothing about the content (let Episerver import decide what to do about it)

- find a content type processor using the content type id from the registered content type processors

- note, in our implementation a content type processor can handle a single or many content types, that's why it has the CanHandle(string) method defined in the interface

- first processor saying it can handle the content is used

- if not content type processor is found, we check the content master language that it is enabled in the system

- if the master language is not enabled, we cancel the import for the content as the Episerver import would otherwise error on the content and break the import process

- if a processor was found, we call its ProcessMasterLanguageProperties method with the master language properties

- if the processor returns true, so the content was processed without errors, we save the modified properties to RawContentData property (event argument e.TransferContentData.RawContentData)

- and then we have code that loops the e.TransferContentData.RawLanguageData (list containing the language branches)

- check that the language is enabled in the system, if not - add the language to "to be removed list"

- pass the parsed language properties to ProcessLanguageBranchProperties method in the processor

- if the method returns true, save the properties to the original object (RawContent.Property whihc is RawProperty array, so we basically call for the dictionary: content.Property = rawProperties.Values.ToArray();)

- if the method returns false, then add the language to "to be removed list"

- finally, if there are any entries in the "to be removed list", we remove those languages from the original list

- and then we have code that loops the e.TransferContentData.RawLanguageData (list containing the language branches)

- if the processor returns true, so the content was processed without errors, we save the modified properties to RawContentData property (event argument e.TransferContentData.RawContentData)

Content manipulation

So what can we do about the content:

- we can cancel the content import by setting the e.Cancel = true, in the event handler for the ContentImportingEventArgs instance

- we can change the content type id

- for example, let's say that you have copied (like copy paste source code) from site A to site B, but you have changed the content type id and the class name and now you would like to import some instance from site A to site B

- if all the properties are still the same, it is enough just to change the content type id value in the import to match the target system content id

- we can remove properties, simply in the code remove the property from the rawDictionary (referring our processing implementation)

- we can rename properties, simply get the property using the source property name and change the referenced RawProperty objects property name

- we can convert property types (with some limitations, you need to be able to write the code to do the conversion)

- for example in our case, we had used Episerver Url type for a property and in the destination we had changed it to ContentReference as the properties were used for example for image so there was no need for it to be Url

- so we have created a helper method which tries to convert source Url to a ContentReference

- the source value is like this in the RawProperty value (string): ~/link/653ebc1fa8184dbdbab2023e3998ce28.aspx

- code extracts the "guid" string from the source, so it grabs the value: 653ebc1fa8184dbdbab2023e3998ce28

- and then creates a new string with the following format: [653ebc1fa8184dbdbab2023e3998ce28][][]

- thats how ContentReference is serialized to the epix.xml, so we a re just mimicin that, NOTE! There can be also language information but we are leaving those empty in our case

- so we have created a helper method which tries to convert source Url to a ContentReference

- for example in our case, we had used Episerver Url type for a property and in the destination we had changed it to ContentReference as the properties were used for example for image so there was no need for it to be Url

- we can re-map the content to a totally different content type

- so basically change the content type id

- rename properties

- remove properties

- add new properties and copy values to it from multiple properties

- for example when target uses a local block (block as a property) and the source have the values in multiple properties

- you can change the contents publishing status

- for example in our code we have blocks published but pages are changed to draft

- you could add new language branches in your code

- you could change content master language

- for example if all content should be created in english and your source content has content created in Finnish as the master language and there is English language branch, then in your code you could switch the master language properties vice versa with tha language branch

What we can't do?

- add totally new content that doesn't exist in the import data, meaning you just can't create a new additional page for example, because everything is based on the import data

- well you might be able to do some hacks like storing information about some content during the import process and after import have something else be triggered to create the extra content

Property import

Last step in the import is the PropertyImporting event which we basically used only for debug logging in development (property information, what is about to be imported).

Closing words

This time a bit less code than usually in my posts but I simply can't copy paste the code from the project :D Hopefully this post though gives you some ideas if you find your self in a situation that you need to find a way how migrate a lot of content from site or sites to another site - you might maybe be able to import some if not all content using a custom import processing implementation and cut the costs in the migration project.

I make no promise but I might later add a new post with a working sample code with two Alloy sites - but as we all know that Episerver .Net Core is coming, so depending when it is officially released might change my priorities :D

Please feel free to ask questions in the comments section if something was left unclear or needs more information and I will try to answer.

Thanks for well detailed post Antti! We also encounter the same issue when migrate from old page type to new one. Using convert feature from Admin UI is OK, but sometimes if the page contains error (missing or invalid files), it will stop exporting, which seems bothersome. From programmatically view I think using code is easier to handle issue. I can see you're using thumbnail image also. Happy blogging and hope to see your next post here ;).