Tagging and post-analyzing media files for a better search function

Why?

I guess most developers who use Episerver Find have run into HTTP 413 when indexing media files. If you take a closer look at the indexed documents, there's a "SearchAttachment" field that contains the Base64-encoded content of the whole file. Somewhere under the hood, that Base64 string is processed so that search queries return more relevant items.

That's quite a lot of "extra" work, and it doesn't play well with high-res media or even just a huge PDF document.

Azure CV API

The Computer Vision API is part of Azure Cognitive Services. Most importantly for most devs, it offers a free tier with a generous 5,000 requests per month. (See pricing)

Integration is straightforward since all transactions are made over HTTP.

How?

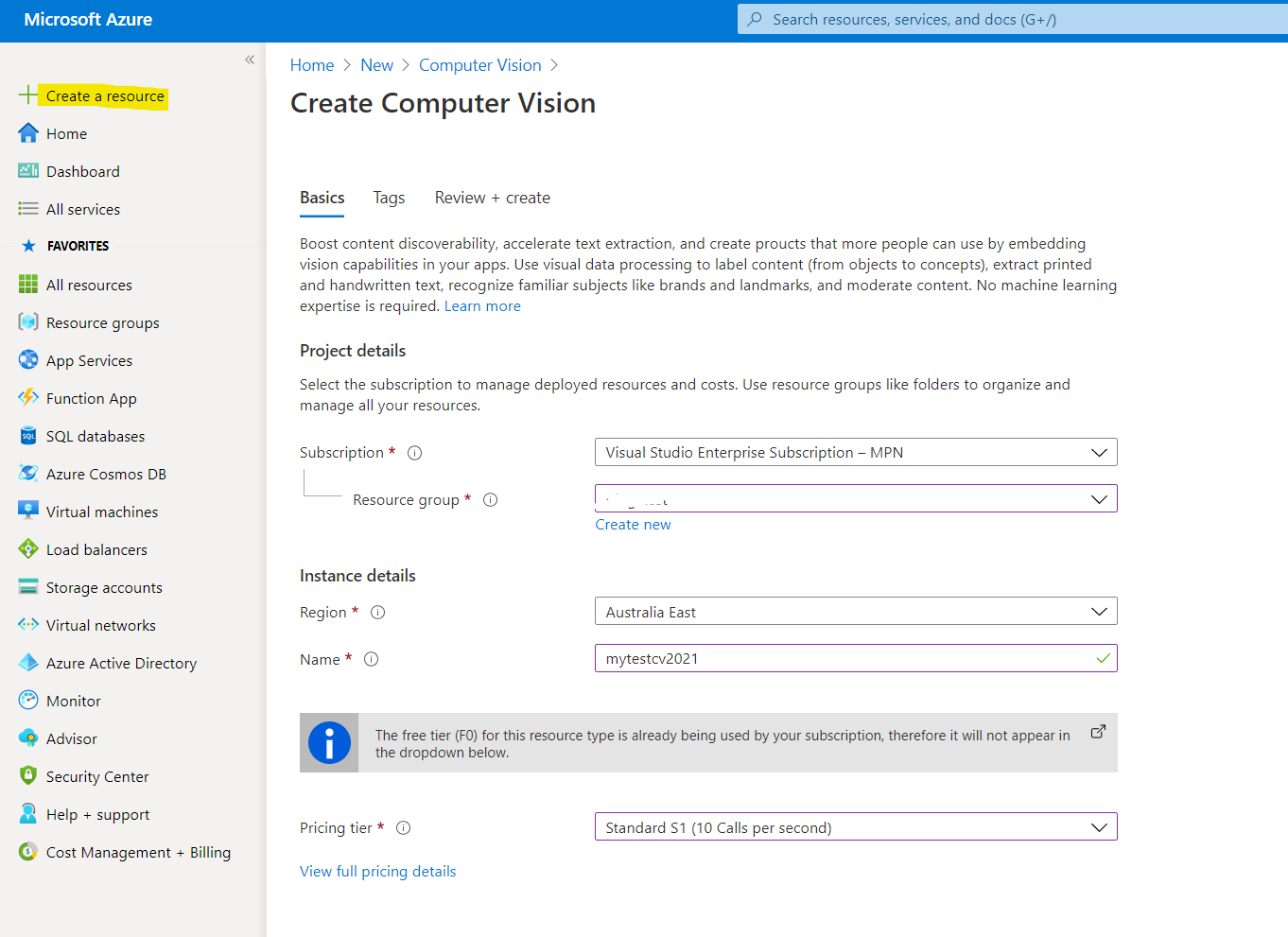

1. Create an Azure Portal account and resource

You need an Azure account. From the Home page, click "Create a resource", search for "Computer Vision", and follow the steps (if you don't have a resource group yet, create one, it won't bite).

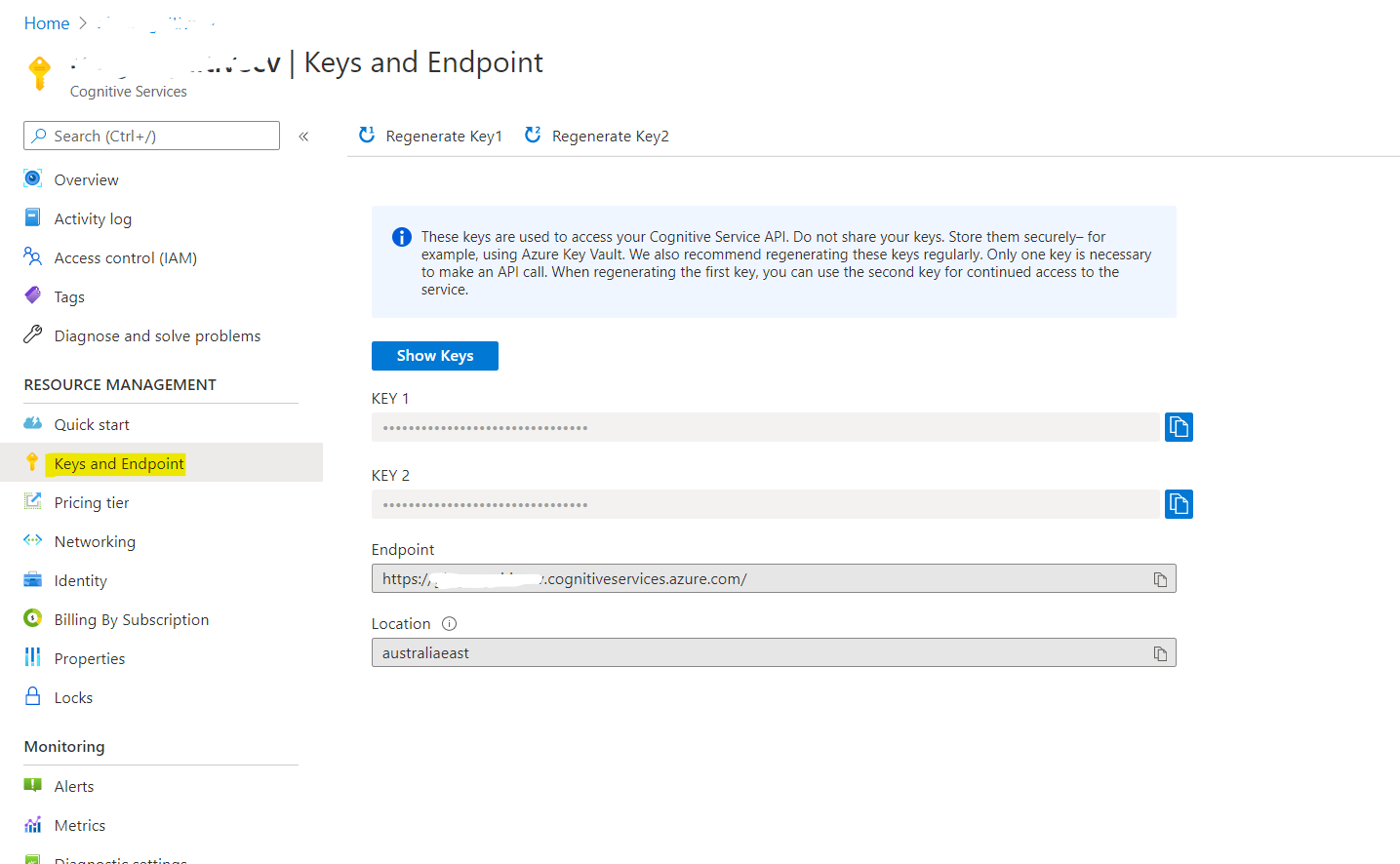

After creation, grab your API credentials.

2. Say hi to the service

MSFT provides a great test page: https://westcentralus.dev.cognitive.microsoft.com/docs/services/computer-vision-v3-ga/operations/56f91f2e778daf14a499f21b

You can play around with it to learn what the service requires, which options it offers, and so on, before you start using Azure CV.

3. Time to integrate with CMS

I'll demonstrate with the sample Alloy CMS site (MVC). I'll only show a basic integration for tagging and describing ImageData.

Find a good place to store API credential

For example, you can store the settings in the web.config <appSettings> section. That makes them easy to maintain and configure when you deploy to hosting such as DXC.

<configuration>

<appSettings>

<add key="COMPUTER_VISION_SUBSCRIPTION_KEY" value="*******" />

<add key="COMPUTER_VISION_ENDPOINT" value="https://******.cognitiveservices.azure.com/" />

</appSettings>

</configuration>

Set-ups

Create a new GroupDefinition to make our editor UI neat.

// Add to Global.GroupNames

[Display(Order = 10)]

public const string CvImageData = "Computer Vision Data";

Add new fields to the ImageData model.

// Models.Media.ImageFile

[Display(

Name = "Analyzed",

Description = "Uncheck this to force analyzing again",

GroupName = Global.GroupNames.CvImageData,

Order = 10)]

public virtual bool CvIsAnalyzed { get; set; }

[Display(

Name = "Tags",

Description = "",

GroupName = Global.GroupNames.CvImageData,

Order = 20)]

public virtual string CvTags { get; set; }

[Display(

Name = "Describe",

Description = "Auto-generated description of this image",

GroupName = Global.GroupNames.CvImageData,

Order = 30)]

[UIHint(UIHint.Textarea)]

public virtual string CvDescribe { get; set; }

[Display(

Name = "Accent color",

Description = "The dominant hue in the image",

GroupName = Global.GroupNames.CvImageData,

Order = 40)]

public virtual string CvAccentColor { get; set; }

[Display(

Name = "Raw data",

Description = "Response from Cognitive Service",

GroupName = Global.GroupNames.CvImageData,

Order = 100)]

[ReadOnly(true)]

[UIHint(UIHint.Textarea)]

public virtual string CvData { get; set; }

Integrate

My plan is to use ContentEvents to analyze images by sending them to the Azure CV service. To prevent excessive usage, the CvIsAnalyzed field controls whether an image needs to be analyzed or not.

First of all, construct a new InitializationModule:

[InitializableModule]

[ModuleDependency(typeof(EPiServer.Web.InitializationModule))]

public class ImageCognitiveInitializationModule : IInitializableModule

{

private static Injected<IContentEvents> _contentSvc;

private static Injected<IContentRepository> _repoSvc;

private static Injected<ILogger> _logSvc;

// Load configurations from web.config

static string subscriptionKey = ConfigurationManager.AppSettings["COMPUTER_VISION_SUBSCRIPTION_KEY"];

static string endpoint = ConfigurationManager.AppSettings["COMPUTER_VISION_ENDPOINT"];

public void Initialize(InitializationEngine context)

{

var events = _contentSvc.Service;

events.SavedContent += Events_CreatedContent;

events.PublishedContent += Events_CreatedContent;

}

private void Events_CreatedContent(object sender, EPiServer.ContentEventArgs e)

{

// Todo: Analyze and update ImageData

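}

public void Uninitialize(InitializationEngine context)

{

// Detach the handlers registered in Initialize

var events = _contentSvc.Service;

events.SavedContent -= Events_CreatedContent;

events.PublishedContent -= Events_CreatedContent;

}

}

Analyze image with Azure CV API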

The service requires you to POST a request to the URI [api_endpoint]/vision/v3.1/analyze with your subscription key in the "Ocp-Apim-Subscription-Key" header (it's a plain API key, not a bearer token). The POST body is an octet-stream.

If you're on a non-DXC environment or using external storage/CDN, make sure the server allows buffering and chunking requests.

This is good enough for the request:

string uriBase = "vision/v3.1/analyze"; // Prevent magic string

HttpClient client = new HttpClient();

client.BaseAddress = new Uri(new Uri(endpoint), uriBase);

client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

HttpResponseMessage response;

byte[] byteData = GetImageAsByteArray(img);

using (ByteArrayContent content = new ByteArrayContent(byteData))

{

content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

// Blocks synchronously here; swap in a fire-and-forget approach if you prefer

response = client.PostAsync("?visualFeatures=Description,Tags,Color&language=en", content).GetAwaiter().GetResult();

}

string contentString = response.Content.ReadAsStringAsync().GetAwaiter().GetResult();

With the default implementation (local files in ~\App_Data\blob or Azure Blobs), it's pretty easy to get a byte array of the image:

private byte[] GetImageAsByteArray(ImageFile img)

{

// Copy the blob stream into memory and return its bytes

using (Stream blobStream = img.BinaryData.OpenRead())

using (MemoryStream ms = new MemoryStream())

{

blobStream.CopyTo(ms);

return ms.ToArray();

}

}

All you need to do is deserialize the JSON in the contentString variable and put it in the custom fields of your model. Don't forget:

- Set CvIsAnalyzed = true

- Use Patch to prevent creation of a nonsense content version

_repoSvc.Service.Save(img, SaveAction.Patch, AccessLevel.NoAccess);

- Do try-catch aggressively when reading info from the JSON string
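The deserialization step could look roughly like the sketch below. It is only an illustration under a few assumptions: it uses System.Text.Json (built into .NET Core 3.0 and later, a NuGet package on .NET Framework; Json.NET would work just as well), the CvResult and CvResponseParser names are made up for this example, and it reads only the "tags", "description.captions" and "color.accentColor" parts of the v3.1 analyze response. Anything missing or malformed simply leaves the matching field empty.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical holder for the values copied onto CvTags, CvDescribe and CvAccentColor.
public sealed class CvResult
{
    public string Tags { get; set; } = "";
    public string Describe { get; set; } = "";
    public string AccentColor { get; set; } = "";
}

// Hypothetical helper, not part of Episerver or the Azure SDK.
public static class CvResponseParser
{
    // Reads only the parts of the analyze response used in this post.
    // Invalid JSON or missing sections leave the matching fields empty.
    public static CvResult Parse(string json)
    {
        var result = new CvResult();
        if (string.IsNullOrWhiteSpace(json)) return result;

        try
        {
            using (JsonDocument doc = JsonDocument.Parse(json))
            {
                JsonElement root = doc.RootElement;
                if (root.ValueKind != JsonValueKind.Object) return result;

                // "tags": [ { "name": "...", "confidence": 0.99 }, ... ]
                if (root.TryGetProperty("tags", out JsonElement tags)
                    && tags.ValueKind == JsonValueKind.Array)
                {
                    var names = new List<string>();
                    foreach (JsonElement tag in tags.EnumerateArray())
                    {
                        string name = GetString(tag, "name");
                        if (name.Length > 0) names.Add(name);
                    }
                    result.Tags = string.Join(", ", names);
                }

                // "description": { "captions": [ { "text": "..." } ] }
                if (root.TryGetProperty("description", out JsonElement description)
                    && description.ValueKind == JsonValueKind.Object
                    && description.TryGetProperty("captions", out JsonElement captions)
                    && captions.ValueKind == JsonValueKind.Array
                    && captions.GetArrayLength() > 0)
                {
                    result.Describe = GetString(captions[0], "text");
                }

                // "color": { "accentColor": "873B59" }
                if (root.TryGetProperty("color", out JsonElement color))
                {
                    result.AccentColor = GetString(color, "accentColor");
                }
            }
        }
        catch (JsonException)
        {
            // Not valid JSON: fall through and return the empty result.
        }
        return result;
    }

    // Returns the string property, or "" when it is missing or not a string.
    private static string GetString(JsonElement element, string propertyName)
    {
        if (element.ValueKind == JsonValueKind.Object
            && element.TryGetProperty(propertyName, out JsonElement value)
            && value.ValueKind == JsonValueKind.String)
        {
            return value.GetString();
        }
        return "";
    }
}
```

In the event handler you would call CvResponseParser.Parse(contentString), copy the three values onto CvTags, CvDescribe and CvAccentColor, keep the raw contentString in CvData, and then save with Patch as described above.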

Polishing

You'll need to check up front whether a request to Azure is actually needed. Otherwise, it costs you money.

var img = e.Content as ImageFile;

if (img == null || img.CvIsAnalyzed) return;

This may cause issues if you have complex roles and restrictions. Depending on your requirements, you can do this so that everyone can re-analyze the image:

PrincipalInfo.CurrentPrincipal = new GenericPrincipal(new GenericIdentity("[something meaningful so you would know it's your automated task]"),

new[] { "Administrators" });

Checking up

Upload an image:

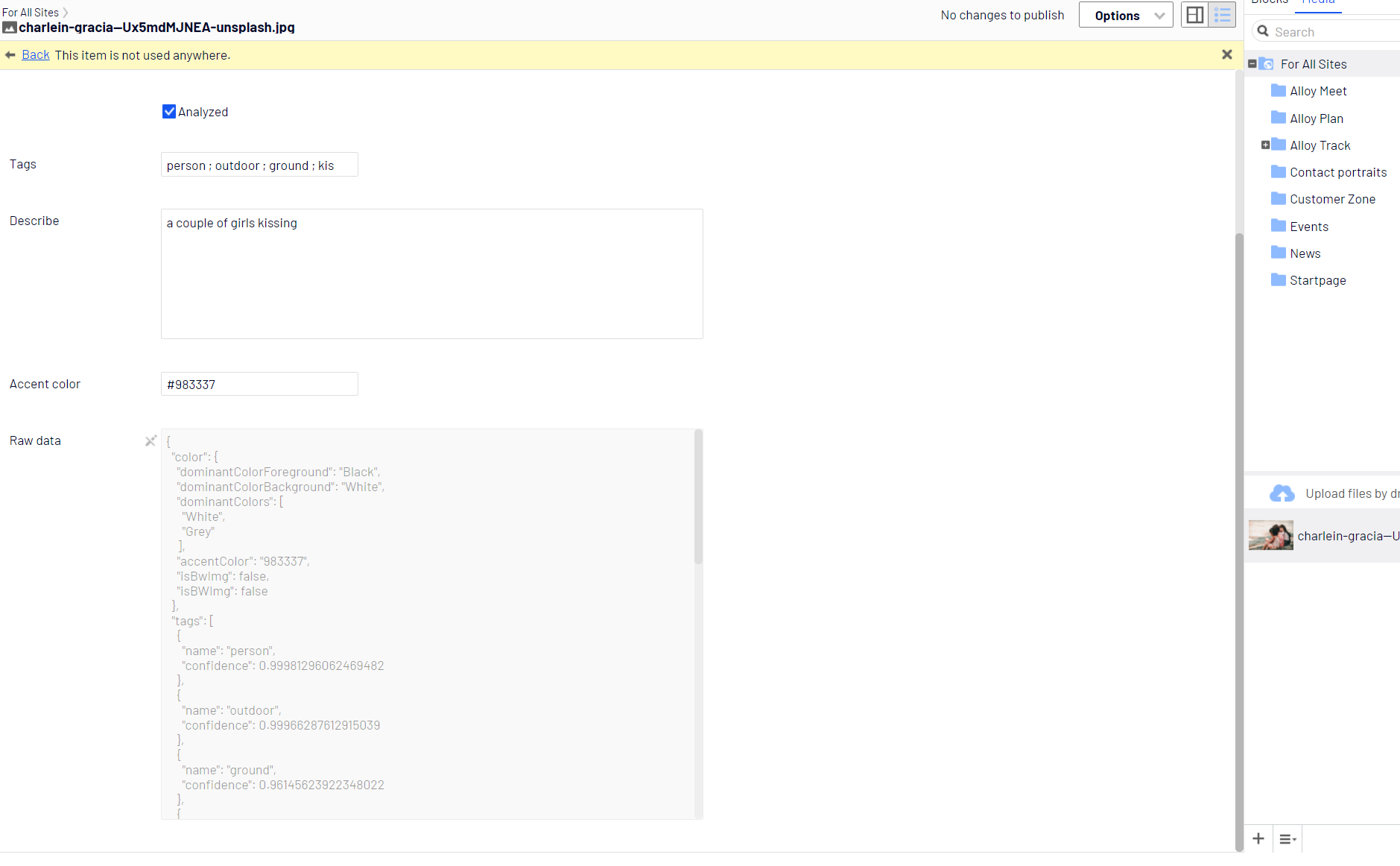

Then check the analyzed data. Voilà!

So what?

Use the analyzed data for more accurate search!

Imagine you're creating a website that sells stock photos. The search function will be awesome with tagged and well-analyzed image data.

Feel free to get rid of the "SearchAttachment" field, and say goodbye to HTTP 413 when indexing media files!

[InitializableModule]

public class FilterConfig : IInitializableModule

{

private Injected<IClient> findClientSvc;

public void Initialize(InitializationEngine context)

{

// Keep the Base64 attachment fields out of the Find index for images

findClientSvc.Service

.Conventions

.ForInstancesOf<EPiServer.Core.ImageData>()

.ExcludeField(x => x.SearchAttachment())

.ExcludeField(x => x.SearchAttachmentText());

}

// Required by IInitializableModule; nothing to clean up here

public void Uninitialize(InitializationEngine context) { }

}

You can use the same approach to analyze PDFs and MS Office documents.

Afterword

This is merely an example and a demonstration, and obviously an out-of-the-box implementation. Please use it at your own risk if you're planning to add the code above to your site. The content is generated by machines, so it might sometimes be inappropriate for your site (look closely at the previous image 😅).

Plus, you may have a different idea than mine. If so, please feel free to leave a comment below, sharing is caring :)

I've created a nuget package that can help you generate metadata using Azure Computer Vision. Also demonstrated at Episerver Developer Happy Hour in 2020.

Nuget: https://nuget.episerver.com/package/?id=Gulla.Episerver.AutomaticImageDescription

Blog post: https://www.gulla.net/no/blog/episerver-automatic-image-metadata/