The A/A Test: What You Need to Know

Sure, we all know what an A/B test can do. But what is an A/A test? How is it different?

With an A/B test, we know that we can take a webpage (our “control”), create a variation of that page (the new “variant”), and deliver those two distinct experiences to our website visitors in order to determine the better performer. Also known as split-testing, the A/B test process compares the performance of the original versus the variant, according to a set list of metrics, to learn which of the two produced better results.

Thanks to experimentation platforms, such as Optimizely Web Experimentation, many of us also recognize the undeniable value of being able to test two variations of a web page without any alterations to back-end code. Talk about speed-to-value!

So then, what is an A/A test? And why do we need to incorporate this type of “test” into the planning and strategy phases of our experimentation program?

What Is an A/A Test?

The A/A test comes before all other tests on the experimentation platform to essentially serve as the practice run of your experimentation program. Unlike an A/B test, the A/A test does not create a variation from the original webpage. Instead, the A/A model tests the original webpage against a copy of itself in order to check for any issues that could potentially arise before an actual test is conducted on the platform.

So, while an A/B test compares two different versions of a webpage (no matter how minor the difference between the two), an A/A test compares two versions of a webpage that are exactly the same. There is no difference between the control and the variant in an A/A test (Version A = Version A). You are basically testing a webpage against itself!

Of course, that raises the question...

Why Is an A/A Test Useful?

The purpose of the A/A test is primarily two-fold:

- Platform-level: To test the functionality of the web experimentation platform

- Experiment-level: To test the validity of data capture and test setup

An A/A test, therefore, gives you assurance that your platform is ready to conduct your experimentation program before it is put into widespread use.

When to Use an A/A Test

The A/A test should be the first test that you launch after implementing the Optimizely JavaScript tag on the website.

Note: If you have multiple websites and/or use single-page application (SPA) web pages, you should perform an A/A test whenever the tag has been newly implemented into the header code, even if using the same tag across all experiences.

How to Set Up an A/A Test

Once the tag has been installed, you can begin your A/A test setup. Similar to how you would build an A/B test, the creation of an A/A test starts by clicking the Create New Experiment button in the top-right corner of Optimizely Experimentation and then selecting A/B Test from the dropdown menu. (Yes, A/B; but our B will remain our A for this test.)

The setup of an A/A test is fairly simple and quick, but it should account for the primary and secondary metrics that belong in this type of test.

A/A Test Metrics

While it may be tempting to include as many metrics as possible, refrain from doing so! Remember that Optimizely recommends no more than five total metrics per test to avoid test lag: the primary metric plus 2-4 secondary metrics.

The metrics in an A/A test are meant to reveal any noticeable differences between the data captured from the two webpages. In other words, the test lets you compare the Control A results against the Variant A results to be sure that the data is comparable.

Page Metrics

Page-level metrics, such as pageviews and bounce rate, are generally included in an A/A test because they capture basic yet important data points. The data collected from these metrics can help you quickly check whether your website is sending data to Optimizely Web Experimentation via the JavaScript code.

If no pageviews are recorded in the results of an A/A test, then we immediately know that there is an issue to address.

Note: To troubleshoot this issue, double-check the implementation of the Optimizely tag to ensure that it appears in the header code of your website.
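As a rough illustration of that check, here is a minimal Python sketch that scans a page's HTML for a script tag inside the head. It assumes the tag is loaded from a URL containing `cdn.optimizely.com/js/`; treat that marker as an assumption and adjust it to match the snippet shown in your own project settings. The names `HeadScriptFinder` and `has_snippet_in_head` are made up for this example.

```python
from html.parser import HTMLParser


class HeadScriptFinder(HTMLParser):
    """Collect the src of every <script> tag that appears inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.head_script_srcs = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            src = dict(attrs).get("src")
            if src:
                self.head_script_srcs.append(src)

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def has_snippet_in_head(html, marker="cdn.optimizely.com/js/"):
    """Return True if a script in <head> has a src containing the marker.

    The default marker is an assumed URL pattern, not a guaranteed one.
    """
    finder = HeadScriptFinder()
    finder.feed(html)
    return any(marker in src for src in finder.head_script_srcs)
```

You could feed this function the saved source of a page (or the response body from an HTTP request) and investigate any page for which it returns False.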

Click & Custom Metrics

Metrics that track specific user actions may also be helpful to add to your A/A test. These metrics might include a click event and/or a transactional event that can let you know if a specific user action has been captured correctly by the experimentation platform.

Depending on your website, some of these events may also be custom-developed, which gives further reason to include them in your A/A test in order to ensure that these custom events have been developed to provide the type of data that you expect to see.

As a general rule of thumb, you will want to use metrics that would likely appear in most tests of your experimentation program. Add the metrics that would make the most sense to include: those that can help you validate the functionality of your experimentation platform and the accuracy of the metrics that will collect data for your planned tests.

Need help deciding? Read: "How to Determine Which Test Metrics to Select"

What Should I Look for in an A/A Test?

The ideal outcome of an A/A test is to see similar results between the two identical versions of the webpage. Since an A/A test compares the metrics of two identical webpages against each other, the expectation is that there will be no meaningful difference in the metric values between them.

A successful A/A test would show nearly the same results between the Control (A) and the "Variant" (A).

While the collected results may not be exactly the same, any differences between the results of Control A and those of Variant A should be very slight. If any major differences are seen in the A/A test results for any metric, then we know that we should take a closer look at the setup of that metric. (Again, if the pageviews are simply not recording in the results of the platform, then our first step would be to check the installation of the tag on the website.)
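To put a number on "very slight," one option is to run a quick check of your own on the exported counts. The sketch below uses a classical two-proportion z-test, which is a textbook fixed-sample method and not a reproduction of how the Optimizely results page calculates significance. The function name and the sample counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare two conversion rates; return (z, two-sided p-value).

    conv_a, conv_b: conversions in Control A and Variant A
    n_a, n_b:       visitors in Control A and Variant A
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pool the rates, since the null hypothesis says both arms are identical.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so nothing to distinguish
    z = (rate_b - rate_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


# Hypothetical counts: 500 vs. 510 conversions out of 10,000 visitors each.
z, p = two_proportion_z_test(500, 10_000, 510, 10_000)
print(f"z = {z:.2f}, p = {p:.2f}")
```

A large p-value here is consistent with two identical experiences. A very small one on an A/A test suggests that the metric setup or the tracking deserves a closer look, keeping in mind that an occasional low p-value can also occur by chance.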

Zero Statistical Significance

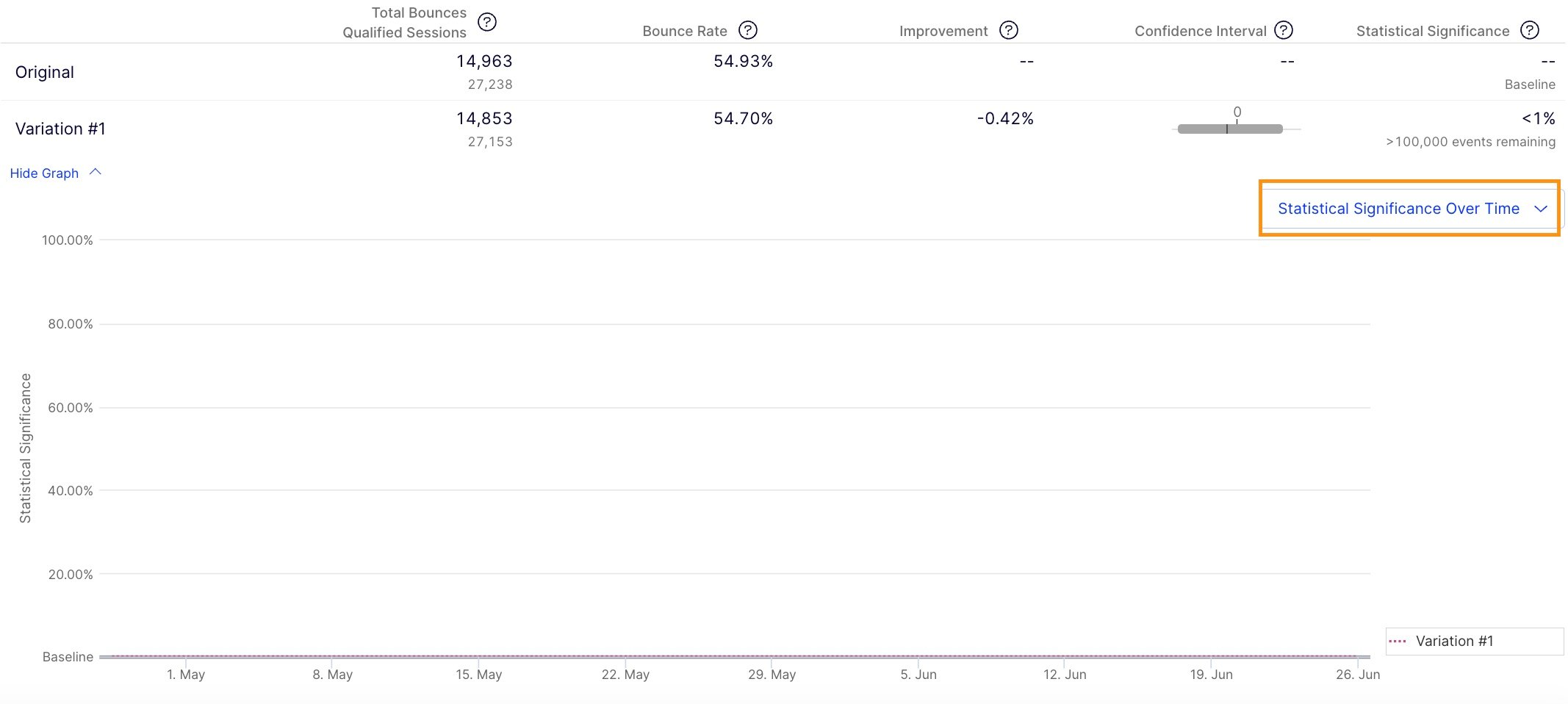

Similar results between the two experiences should also prevent any metric from reaching statistical significance. Therefore, statistical significance serves as a helpful guide when we check the results of an A/A test.

Ideally, we are looking for the statistical significance to "flat line" for all metrics throughout the duration of an A/A test. If statistical significance spikes, then we can flag an issue to address.
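If you track significance values outside the platform, a small script can do the spike-spotting for you. The following Python sketch assumes you have recorded one statistical significance value per day for each metric (as fractions between 0 and 1) and that 0.90 is the threshold you care about. Both the data layout and the threshold are assumptions for illustration, and `flag_significance_spikes` is a hypothetical helper, not a platform feature.

```python
def flag_significance_spikes(series_by_metric, threshold=0.90):
    """Return {metric: [day numbers]} for days at or above the threshold.

    series_by_metric maps a metric name to a list of daily significance
    values, where the first entry is day 1.
    """
    flagged = {}
    for metric, values in series_by_metric.items():
        days = [day for day, value in enumerate(values, start=1)
                if value >= threshold]
        if days:
            flagged[metric] = days
    return flagged


# Hypothetical daily readings for two A/A test metrics.
readings = {
    "pageviews": [0.02, 0.05, 0.04, 0.03, 0.06],
    "add_to_cart_click": [0.10, 0.35, 0.92, 0.95, 0.40],
}
print(flag_significance_spikes(readings))
```

In this made-up data, the pageview metric stays flat while the click metric crosses the threshold on days 3 and 4, so the click metric is the one to investigate.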

Tip: Use the graph view in Optimizely Web Experimentation to look for any upticks in statistical significance for any of your A/A test metrics.

A/A Test Use Cases

More a useful practice than a “test” itself, the A/A test is a practical step to include in your experimentation program for several reasons. For example, you can use this test to:

- Confirm the functionality of your experimentation platform

- Determine the feasibility of performing a test on a specific web page

- Validate the accuracy of the software’s performance metrics

- Check the tracking and analytics of your primary and secondary metrics

- Obtain baseline data for these metrics that can be used to forecast targets

Or, of course, all of the above! As you can see, the A/A test not only verifies the readiness of your software but lets you spot-check the setup of individual tests, as well. Use this type of test as your “dry run” before your first A/B or multivariate test to gain the peace of mind you need to successfully launch your experimentation program!
