We Cloned Our Best Analyst with AI: How Our Opal Hackathon Grand Prize Winner is Changing Experimentation

Every experimentation team knows the feeling. You have a backlog of experiment ideas, but progress is bottlenecked by one critical team member: the analyst.

Our analyst is the hero of our experimentation programmes. She’s the one who dives into Google Analytics, digs for data and runs the complex calculations for every single test idea. Sample size, baseline metrics and test duration all flow through her. But she’s only one person, so the process is slow, repetitive and a classic bottleneck. I can’t count the number of times I’ve jokingly said to her:

“I wish I could clone you!”

That offhand comment sparked an idea. During a brainstorming session with Niteco's AI R&D department for the Optimizely Opal Hackathon in New York, we asked ourselves: what would be the biggest-impact solution we could build with AI? I immediately thought: what if we could clone our analyst? What if we could build an AI version of her, an “Experimentation Strategist AI Worker” that could handle the soul-crushing, repetitive work, freeing us up to focus on strategy and insights?

That idea didn’t just get us excited. It won us the Grand Prize at the Opal Hackathon in New York.

I want to take you behind the scenes of our award-winning solution and show you how we’re using Optimizely Opal to build an AI teammate for every experimentation team.

Meet your new AI teammate: The Experimentation Strategist AI Worker

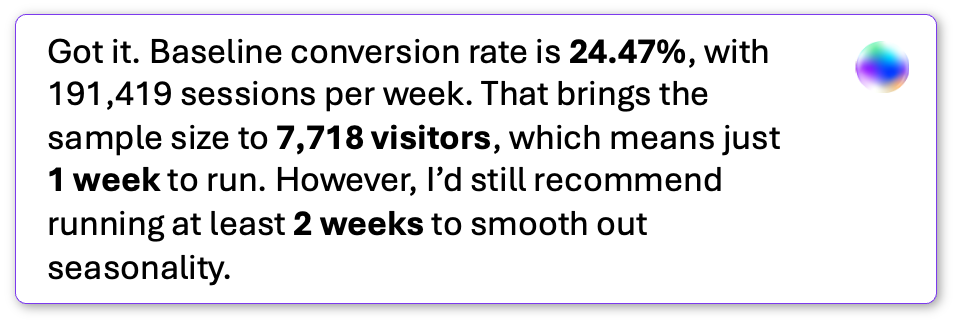

At its heart, our solution (which we affectionately call the “Sample Size Genie”) is a specialised AI Worker built natively on Optimizely Opal. It’s not a standalone tool or a fancy chatbot; it’s a fully orchestrated, multi-agent workflow that automates the entire experiment planning process.

It’s designed for anyone on the team, regardless of their technical skill. You simply talk to it in plain English.

You can say: "Calculate the sample size for a test on our product detail pages, focusing on desktop traffic in the US market."

Instantly, our AI Worker gets to work. It connects to your GA4 property or multiple properties, identifies all the pages that match the “product detail page” pattern, pulls the relevant traffic and baseline conversion metrics, applies the segmentation you asked for, and comes back in seconds with the sample size, estimated test duration and a recommendation on the test’s feasibility.

No more spreadsheets. No more waiting for days. Just answers.

How it works (without getting too technical)

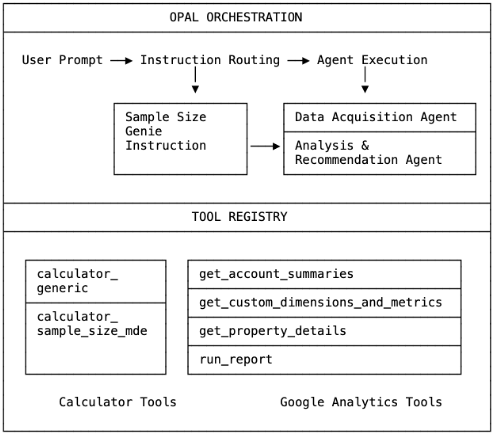

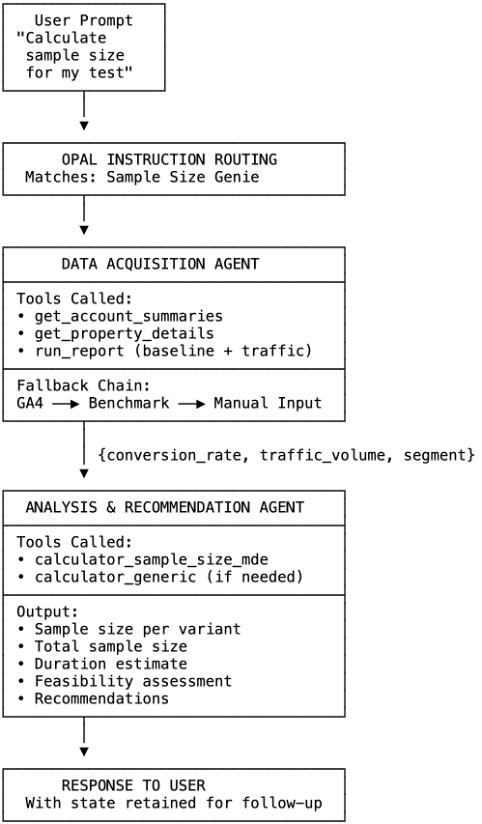

The magic lies in how we used Opal’s native architecture. We didn’t just write a clever prompt; we built a collaborative team of two specialised AI agents that work together seamlessly.

1. The Data Acquisition Agent

This agent is the researcher. Its job is to understand your request, connect to the right data source (such as GA4), retrieve the exact metrics you need and structure the information. If it can’t connect to GA4, or if you’re testing a brand-new feature with no historical data, it’s smart enough to fall back on industry benchmarks (such as Dynamic Yield’s published benchmarks) or even ask you for manual input.
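The fallback order described above (GA4 first, then benchmarks, then manual input) can be sketched in a few lines of plain Python. This is only an illustration of the idea, not the agent's actual implementation: `Baseline`, `acquire_baseline` and the three stub sources are hypothetical names, and the numbers in the manual stub are placeholders.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Baseline:
    conversion_rate: float   # e.g. 0.03 means 3%
    daily_visitors: float
    source: str              # which source supplied the numbers


# A source returns a Baseline, returns None if it has no data, or raises
# ConnectionError if it cannot be reached.
Source = Callable[[], Optional[Baseline]]


def acquire_baseline(sources: List[Source]) -> Baseline:
    """Try each source in priority order and return the first usable result."""
    for fetch in sources:
        try:
            result = fetch()
        except ConnectionError:
            continue  # source unreachable: move on to the next one
        if result is not None:
            return result
    raise LookupError("no source could supply baseline metrics")


# Hypothetical stubs standing in for GA4, a benchmark table and the user.
def ga4_unavailable() -> Optional[Baseline]:
    raise ConnectionError("GA4 not reachable")


def no_benchmark() -> Optional[Baseline]:
    return None  # no benchmark available for this page type


def manual_input() -> Optional[Baseline]:
    return Baseline(conversion_rate=0.03, daily_visitors=1200, source="manual")


baseline = acquire_baseline([ga4_unavailable, no_benchmark, manual_input])
print(baseline.source)  # prints "manual"
```

Keeping the sources in an ordered list means the priority can be changed, or a new source added, without touching the loop.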

2. The Analysis & Recommendation Agent

This agent is the strategist. It takes the structured data from the first agent and gets to work. It applies the correct statistical formulas, calculates the sample size and test duration and, most importantly, assesses the viability of the experiment. If the traffic is too low to yield meaningful results, it won’t just say "no". It will recommend alternatives, like expanding the target audience or adjusting the expected uplift.
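To give a feel for the kind of calculation involved, here is a minimal sketch using the standard normal-approximation formula for comparing two conversion rates. We are not claiming this is the exact formula the agent applies. The function names, the default significance level (5%) and power (80%), and the 56-day feasibility cap are all assumptions made for the example.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_uplift, alpha=0.05, power=0.8):
    """Visitors needed per variant for a two-sided test of two proportions."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)     # expected rate in the variant
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def plan_test(baseline_rate, relative_uplift, daily_visitors, variants=2, max_days=56):
    """Combine sample size and traffic into a duration and a feasibility flag."""
    n = sample_size_per_variant(baseline_rate, relative_uplift)
    days = math.ceil(n * variants / daily_visitors)
    return {
        "sample_size_per_variant": n,
        "duration_days": days,
        "feasible": days <= max_days,  # assumed cap: 8 weeks
    }


# A 5% baseline, a 10% relative uplift and 2,000 eligible visitors per day.
print(plan_test(0.05, 0.10, daily_visitors=2000))
```

Running the same plan with far less traffic, or a smaller expected uplift, pushes the duration past the cap. That is the point at which the agent would suggest widening the audience or revisiting the uplift assumption.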

These two agents collaborate through Opal’s Orchestration Layer, passing data and context back and forth and using a shared Tool Registry to invoke specific functions, such as a calculator or the GA4 Data API. It’s a true AI team, working in perfect sync.
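As a rough mental model of a shared tool registry, here is a sketch in plain Python. It does not use Opal's SDK or reflect its real interfaces: `TOOLS`, the `tool` decorator, both tool functions and `run_workflow` are hypothetical, and the metrics returned are placeholders rather than GA4 data.

```python
import math
from typing import Any, Callable, Dict

# Hypothetical registry: tool name -> callable that any agent can invoke.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(func):
        TOOLS[name] = func
        return func
    return register


@tool("fetch_metrics")
def fetch_metrics(segment: str) -> dict:
    # Stand-in for a GA4 lookup; the values are placeholders.
    return {"segment": segment, "conversion_rate": 0.04, "daily_visitors": 1500}


@tool("estimate_duration")
def estimate_duration(sample_size_per_variant: int, daily_visitors: float, variants: int = 2) -> int:
    return math.ceil(sample_size_per_variant * variants / daily_visitors)


def run_workflow(segment: str, sample_size_per_variant: int) -> dict:
    """Pass a shared context from the data step to the analysis step."""
    context: Dict[str, Any] = {"segment": segment}
    context["metrics"] = TOOLS["fetch_metrics"](segment)   # data acquisition step
    context["duration_days"] = TOOLS["estimate_duration"](  # analysis step
        sample_size_per_variant, context["metrics"]["daily_visitors"]
    )
    return context


print(run_workflow("desktop-us", 30000)["duration_days"])  # prints 40
```

Both steps look tools up by name rather than importing each other, so each agent can be developed and swapped independently.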

Solving FOUR problems in one go

What impressed the judges at the hackathon was how this single solution solves four common experimentation headaches:

- Direct GA4 integration: get sample sizes based on real, live data in seconds.

- Limited traffic segments: receive specialist recommendations when testing on niche audiences.

- Industry benchmarks: plan tests for new features with confidence, even without historical data.

- Manual fallback: keep moving forward even if a data connection fails.

It is a game-changer

This isn't about replacing analysts. It’s about augmenting them. By automating the 80% of their job that is repetitive calculation, we free them up to focus on the 20% that requires a human touch: imagination, deep analysis, strategic thinking and nurturing a culture of experimentation.

We're already building the next evolution of this AI Worker: one that can create many test ideas, generate multiple design variations directly from Figma AI, discuss variant designs with users just as a UX designer would, and then build the variant code and insert it directly into Optimizely Web Experimentation. Imagine going from nothing to a fully built experimentation roadmap with no analyst, designer or developer bottleneck.

That’s the future of experimentation: a world where Opal empowers our creativity and every team has an AI teammate to help them move faster, test smarter, and deliver better results.

We're planning to give away this and other AI Workers for free, even to companies that aren't Niteco's clients, so that everyone in the Optimizely ecosystem can benefit and grow. As they say, “A rising tide lifts all boats”. To be clear: by “we” I mean the OMVPs at Niteco, and by “free” I mean the solution itself will be free, although you will need your own Opal credits to use it.

If you are interested in finding out more, reach out to me on LinkedIn.
