A way of working in a multi-developer team with Episerver DXC-Service

There are probably as many ways of collaborating on Episerver projects, as there are teams out there. This is an approach that I have been using in my teams for quite a while now, and it has served us well.

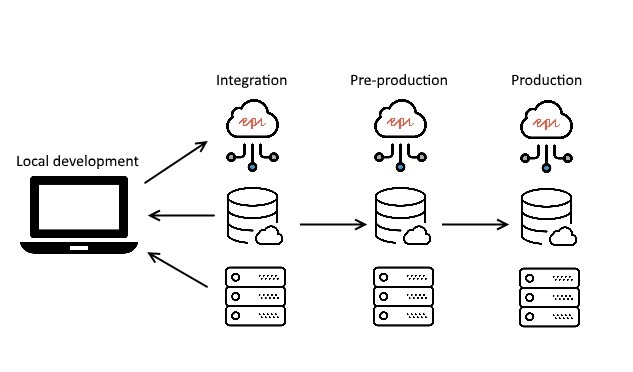

When working with Episerver DXC-Service, you get three environments, Integration, Pre-production, and Production, and you get access to deploying code into the Integration environment (hence its name). From there you either use Episerver Support, or the PaaS portal, to deploy changes from Integration to Pre-production, and then from Pre-production to Production by requesting a scheduled deploy by Episerver support.

This blog post attepts to explain how a team of developers can set up their project, so that changes can be integrated, and synchronized between developer machines, with minimal effort and setup. Minimal is of course relative, so I'll try to explain my reasoningas I go along.

In my experience, working with a shared database is a nightmare. It was workable in Episerver 6, but in projects using a code-first approach, it quickly became unfeasable. In the teams I have been working in for the past 5 years, we have had a shared "Development Master" environment, and used scripts to duplicate the database and blob files from that environment to local development machines. This approach has served us well, since it leaves us free to work remotely, isolated, and minimizes the risk of a single developer messing things up for the other team members when experimenting or doing YSOD-driven development.

In a DXC-Service project, we use the Integration environment as a "Development Master", and it is kept as lightweight as possible, meaning that it only contains enough content to to test functionality and configure features. This ensures that it is a quick process to synchronize to the local environment. The process isn't fool proof, and can leave some things to be desired, especially when working with feature branches, but it works in most cases.

The process itself is one part of the job, another part is making it simple to repeat the process. It can surely be done using visual tools, and pointing and clicking, but that would take forever, and we want this to be fast, and we want to be able to do it several times a day, if needed. In order to keep things secure, simple, and repeatable, there are a few prerequisites.

- I use IIS Express, because that removes the need for a License.config file to be present in my solution.

- I use SQL Server Integrated Security, because that removes the need for stored credentials in my web.config.

- I use a local SQL Server instance, because that allows me to work disconnected and remotely.

Provided that these prerequisites are in place, we can do a few things with Powershell. I'm no powershell guru, so consider this a hack-warning. I have created a script that presents a list of options, to synchronize the database, blobs, or both. My script relies on a file called Environment.ps1, a file that is excluded from source control, and contains all the sensitive information required for these tasks. It uses the Sql Server Dac Framework and AzCopy tools to performs the heavy lifting. Environment.ps1 looks something like this.

[CmdletBinding()]

param()

# SqlPackage is part of the Microsoft® SQL Server® Data-Tier Application Framework (DacFramework) which installed with SSMS or with SQL Server Data Tools, and can be found here https://www.microsoft.com/en-us/download/details.aspx?id=56356

$SqlPackagePath = "C:\Program Files (x86)\Microsoft SQL Server\140\DAC\bin\SqlPackage.exe"

# AzCopy is a command-line utility designed for copying data to/from Microsoft Azure Blob, File, and Table storage, and can be found here: http://aka.ms/downloadazcopy

$AzCopyPath = "C:\Program Files (x86)\Microsoft SDKs\Azure\AzCopy\AzCopy.exe"

# The name of the Web Application directory

$ApplicationDir = "<Name of directory and Web project csproj-file>"

# The name of the Web Application .csproj file (located in the $ApplicationDir directory)

$CSProjFile = "$ApplicationDir.csproj"

# The site hostname of the DXC Service Application, without the protocol identifier.

$DXCSSiteHostName = "dxc-service-subscriptionname-inte.dxcloud.episerver.net"

# The name of the DXC Service Application, usually something ending in "inte", "prep" or "prod".

$DXCSApplicationName = "dxc-service-subscriptionname-inte"

# The connection string to use for the SQL Azure database"

$DBConnectionString = "<ConnectionString for Integration Environment>"

# The key used to access the Azure Blob storage container

$BlobAccountKey = "<Blob Storage Key for the Integration Environment>"

And the Magic script looks like this.

[CmdletBinding()]

param()

$ScriptDir = Split-Path -Path $MyInvocation.MyCommand.Definition -Parent

$ExitScriptNo = 4

# Ensure Environment.ps1 exists

if (-not (Test-Path "$ScriptDir\Environment.ps1")) {

Write-Host "`r`nEnvironment.ps1 could not be found, please make sure it exists. Check documentation for what it should contain." -ForegroundColor "Red"

exit

}

. $ScriptDir\Environment.ps1

if(-not (Get-Variable -Name DXCSSiteHostName -ErrorAction SilentlyContinue)){

Write-Host "`r`nEnvironment.ps1 does not contain the variable DXCSSiteHostName, please ensure it is declared and contains the correct value." -ForegroundColor "Red"

exit

}

# The path to the web application root directory

$AppLocalPath = "$ScriptDir\..\src\$ApplicationDir"

# The path to where blobs are stored in the Episerver application

$BlobLocalPath = "$AppLocalPath\App_Data\blobs"

function Get-EPiServerDBConnectionString {

param([string]$fileName)

$fileExists = Test-Path -Path $fileName

if($fileExists){

$xml = [xml](Get-Content $fileName)

return $xml.SelectSingleNode("/configuration/connectionStrings/add[@name='EPiServerDB']").connectionString

}

else {

Write-Host "Could not extract connectionstring from $fileName, exiting." -ForegroundColor "Red"

exit

}

}

function Get-IISExpress-Url {

param([string]$fileName)

$fileExists = Test-Path -Path $fileName

if($fileExists){

$xml = [xml](Get-Content $fileName)

$ns = New-Object System.Xml.XmlNamespaceManager($xml.NameTable)

$ns.AddNamespace("ns", $xml.DocumentElement.NamespaceURI)

$iisUrl = $xml.SelectSingleNode("//ns:ProjectExtensions/ns:VisualStudio/ns:FlavorProperties/ns:WebProjectProperties/ns:IISUrl", $ns).'#text'

return $iisUrl.Replace("http://", "").Replace("https://", "").TrimEnd("/")

}

else {

Write-Host "Could not extract IIS Express URL from $fileName, exiting." -ForegroundColor "Red"

exit

}

}

function Recreate-Database {

param([string]$targetServer, [string]$databaseName)

$srv = New-Object Microsoft.SqlServer.Management.Smo.Server($targetServer)

if($srv.Databases[$databaseName]){

$srv.KillAllProcesses($databaseName)

$srv.KillDatabase($databaseName)

}

$db = New-Object Microsoft.SqlServer.Management.Smo.Database($srv, $databaseName)

$db.Create()

return

}

function Update-SiteAndHostDefinitions {

param([string]$targetServer, [string]$databaseName, [string]$oldUrl, [string]$newUrl)

$query = "UPDATE tblSiteDefinition SET SiteUrl = 'http://$newUrl/' WHERE SiteUrl = 'https://$oldUrl/';UPDATE tblHostDefinition SET Name = '$newUrl' WHERE Name = '$oldUrl';"

Invoke-Sqlcmd -ServerInstance $targetServer -Query $query -Database $databaseName

}

function Delete-FormPosts {

param([string]$targetServer, [string]$databaseName)

$query = "DELETE FROM [tblBigTableReference] WHERE [pkId] IN (SELECT [pkId] FROM [tblBigTable] WHERE [StoreName] LIKE '%FormData_%');DELETE FROM [tblBigTable] WHERE [StoreName] LIKE '%FormData_%';DELETE FROM [tblBigTableIdentity] WHERE [StoreName] LIKE '%FormData_%';DELETE FROM [tblBigTableStoreInfo] WHERE [fkStoreId] IN (SELECT [pkID] FROM [tblBigTableStoreConfig] WHERE [StoreName] LIKE '%FormData_%');DELETE FROM [tblBigTableStoreConfig] WHERE [StoreName] LIKE '%FormData_%';"

Invoke-Sqlcmd -ServerInstance $targetServer -Query $query -Database $databaseName

}

# Ensure SqlPackage exists

if (-not (Test-Path $SqlPackagePath)) {

Write-Host "`r`nPlease ensure SqlPackage is installed and variable SqlPackagePath in script is correct." -ForegroundColor "Red"

exit

}

# Ensure AzCopy exists

if (-not (Test-Path $AzCopyPath)) {

Write-Host "`r`nPlease ensure AzCopy is installed and variable AzCopyPath in script is correct." -ForegroundColor "Red"

exit

}

# Ensure SqlServer PowerShell Module is installed

if (-not (Get-Module -ListAvailable -Name SqlServer)) {

Write-Host "`r`nSqlServer Powershell Module is not available, please install using command: 'Install-Module -Name SqlServer -AllowClobber'" -ForegroundColor "Red"

exit

}

# Ensure local path for BLOBs exists

if (-not (Test-Path $BlobLocalPath)) {

New-Item -ItemType directory -Path $BlobLocalPath | Out-Null

}

Import-Module SqlServer

while(-Not ($Step -eq $ExitScriptNo) -Or ($Step -eq $NULL)) {

Write-Host "`r`nAvailable options:`r`n" -ForegroundColor cyan

Write-Host "1. Copy Database from SQL Azure to instance specified in Web.config" -ForegroundColor white

Write-Host "2. Copy BLOBs from Azure Blob Storage to local machine" -ForegroundColor gray

Write-Host "3. Full restore (1 & 2)" -ForegroundColor white

Write-Host "4. Exit`r`n" -ForegroundColor gray

$Step = Read-Host "Please choose option"

if(($Step -eq 1) -Or ($Step -eq 3)) {

Write-Host "`r`nBeginning backup of SQL Azure database`r`n" -ForegroundColor yellow

$bacpacFilename = "$ScriptDir\$DatabaseName" + (Get-Date).ToString("yyyy-MM-dd-HH-mm") + ".bacpac"

& $SqlPackagePath /Action:Export /TargetFile:$bacpacFilename /SourceConnectionString:$DBConnectionString /Quiet:True

Write-Host "`r`nFinished backup of SQL Azure database`r`n" -ForegroundColor green

Write-Host "`r`nBeginning restore of SQL Azure database to instance specified in Web.config`r`n" -ForegroundColor yellow

$webConfig = "$AppLocalPath\Web.config"

$webConnectionString = Get-EPiServerDBConnectionString $webConfig

$connString = New-Object System.Data.Common.DbConnectionStringBuilder

$connString.set_ConnectionString($webConnectionString)

$targetDatabaseName = $connString["initial catalog"]

$targetServer = $connString["server"]

Recreate-Database $targetServer $targetDatabaseName

& $SqlPackagePath /Action:Import /SourceFile:$bacpacFilename /TargetDatabaseName:$targetDatabaseName /TargetServerName:$targetServer /Quiet:True

Remove-Item $bacpacFilename

Write-Host "`r`nFinished restore of SQL Azure database to instance specified in Web.config`r`n" -ForegroundColor green

Write-Host "`r`nUpdating site- and host definitions`r`n" -ForegroundColor yellow

$iisExpressUrl = Get-IISExpress-Url "$AppLocalPath\$CSProjFile"

Update-SiteAndHostDefinitions $targetServer $targetDatabaseName $DXCSSiteHostName $iisExpressUrl

Write-Host "`r`nFinished updating site- and host definitions`r`n" -ForegroundColor green

Write-Host "`r`nRemoving any stored Episerver Forms submissions`r`n" -ForegroundColor yellow

Delete-FormPosts $targetServer $targetDatabaseName

Write-Host "`r`nFinished removing Episerver Forms submissions`r`n" -ForegroundColor green

Write-Host "`r`nTouching web.config to force application pool recycle`r`n" -ForegroundColor green

(dir $webConfig).LastWriteTime = Get-Date

Write-Host "`r`nDone with step 1.`r`n" -ForegroundColor green

if(($Step -eq 1)){

Write-Host "Bye" -ForegroundColor green

exit

}

}

if(($Step -eq 2) -Or ($Step -eq 3)) {

Write-Host "`r`nBeginning copying BLOBs to local directory`r`n" -ForegroundColor yellow

& $AzCopyPath /Source:https://$DXCSApplicationName.blob.core.windows.net/blobs /Dest:$BlobLocalPath /SourceKey:$BlobAccountKey /S /MT /XO /Y

Write-Host "`r`nFinished copying BLOBs to local directory`r`n" -ForegroundColor green

Write-Host "`r`nDone with step 2.`r`n" -ForegroundColor green

if(($Step -eq 2)) {

Write-Host "Bye" -ForegroundColor green

exit

}

}

if(($Step -eq 3)) {

Write-Host "`r`nDone with step 3.`r`n" -ForegroundColor green

Write-Host "Bye" -ForegroundColor green

exit

}

if($Step -eq $ExitScriptNo) {

Write-Host "Bye" -ForegroundColor green

exit

}

if([string]::IsNullOrEmpty($Step) -Or ($Step -lt 1) -Or -Not ($Step -lt ($ExitScriptNo + 1))) {

Write-Host "`r`nPlease enter a valid option" -ForegroundColor "Red"

}

}What happens when you run it? Besides pretty colors, it does the following:

The database copy step creates a .dacpac file from the database in the integration environment, copies it to your local machine and then restores it to the database specified in the Web.config file. After it has restored the database, it ensures that the siteUrl in for the configured site matches the configured domain in the project settings in the csproj file, so that routing works locally, and then continues to delete any Episerver Forms posted form data, so that any sensitive posts won't sully your local dev environment (GDPR all the things!).

The blob file step simply copies all the blob files in the blob container and puts them in your App_Data/blobs directory.

Please note, that depending on your specific project, and how your project is set up, you might need to change or remove things. This has worked well for me, but doesn't necessarily guarantee success for you.

This process, combined with a clean CI/CD process of getting your code into the Integration environment, can greatly reduce the time spent on boring stuff like copying databases and blob files. It has shortened our startup time for new developers on the team from half a day, to about 5-10 minutes, depending on connection speed. Time that can be spent on adding value instead!

Good luck, have fun, and most importantly, don't be afraid to break things.

Nice and thanks for sharing your process for others.

You mention GDPR (forms data) and I would like to point out here that when you copy data out you are actually moving possible data that is affected by GDPR (you are data processor). Yes, you delete the data from the database after import but you have moved the data already - I think the most important part here is that if some developer is outside EU then you definetly are braking GDPR as you are moving the data outside EU (so be carefull here).

Thank you for your input Antti. There's definetly room for improvement, even though I'm sure most partners have a processor agreement with their clients (if not, they probably should), but your note on locality is something I hadn't even considered.

Maybe the script could be altered to do the deletion on the dacpac, although I have no idea on how to do that, before it is copied locally. That is something for someone else to solve, I think :)

Thanks for sharing Stephan!